Our company develops 360-degree imaging solutions for the automotive industry. Building on our software development experience, we have designed a system that captures high-quality 360-degree images of vehicles using the camera and motion sensors of a mobile device. This whitepaper describes the technical side of that system: its capabilities, its components, and its potential applications within the automotive sector.

System Overview

The primary goal of our 360-degree imaging solution is to create an immersive visual experience for users, allowing them to explore a vehicle from every angle. To achieve this, our system incorporates advanced image capture and processing techniques, powered by the latest iOS and Android technologies. The key components of our system include:

- High-resolution image capture with accurate spatial coordinates

- Flexible data storage for seamless integration with mobile and web platforms

- Custom player support for iOS, Android, and web-based applications

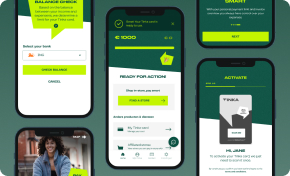

Data Gathering and Processing

Our 360-degree imaging system utilizes a combination of device cameras and motion sensors to collect high-quality images and positioning data. The camera captures images at specific locations, while the motion sensors track the device’s movement around the vehicle. This data is then analyzed and processed to generate a comprehensive 360-degree view of the vehicle.

On iOS, the capture pipeline relies on two primary frameworks:

- AVFoundation: This framework supplies visual data from the device’s camera, enabling high-resolution image capture and real-time preview. AVFoundation processes the captured images using framebuffers for increased efficiency and minimal processing overhead.

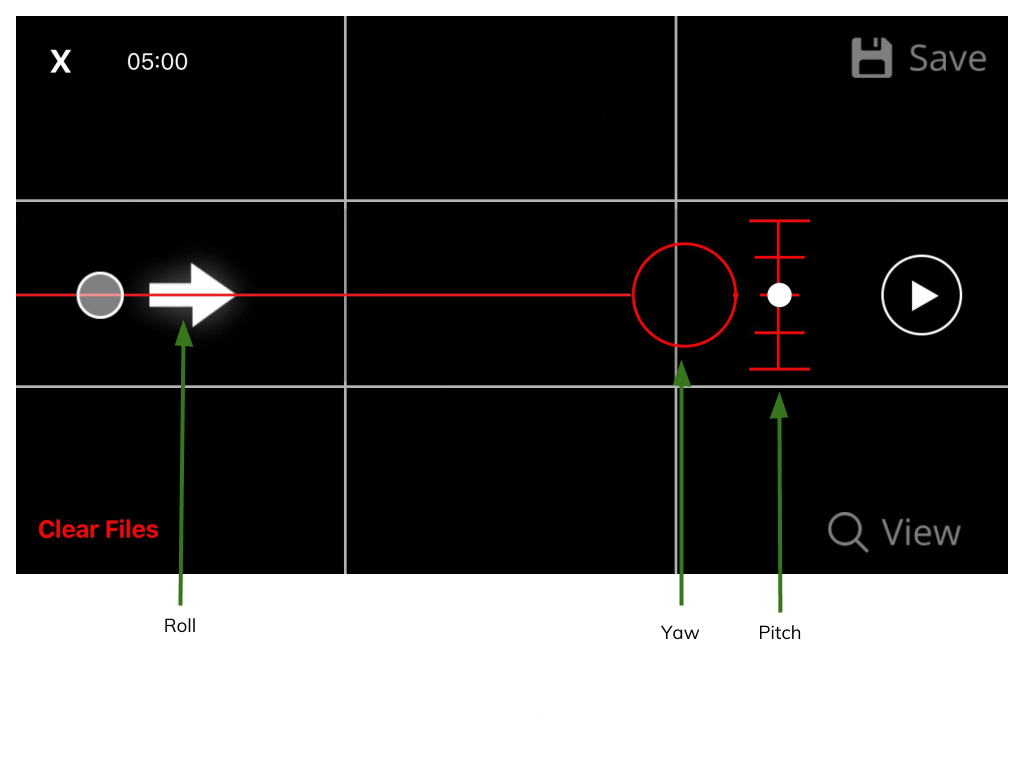

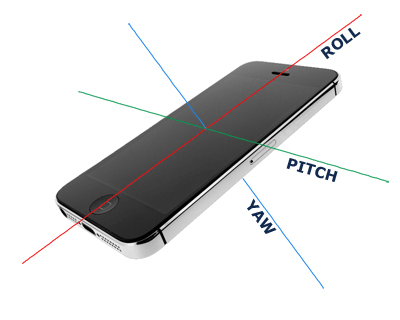

- CoreMotion: This framework provides data on the device’s motion and rotation in space, allowing our system to track its position around the vehicle accurately. CoreMotion monitors three axes of interest: pitch, roll, and yaw. Depending on the device orientation, two axes are used for adjustments, while one axis provides the angle.
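The axis split described above can be illustrated with a small, platform-neutral sketch. It is not our production code: the function name `split_attitude`, the dictionary layout, and the idea of measuring everything against a baseline attitude taken at the start of the capture are assumptions made for illustration. Which axis carries the sweep angle in a given orientation is passed in as a parameter rather than hard-coded. Attitude values are taken in radians, as CoreMotion reports them.

```python
import math

AXES = ("pitch", "roll", "yaw")

def split_attitude(attitude, angle_axis, baseline):
    """Split one attitude reading into a sweep angle and two deviations.

    attitude, baseline: dicts keyed by "pitch", "roll", "yaw" (radians).
    angle_axis: the axis that carries the position around the vehicle
                (hypothetical parameter; chosen per device orientation).
    Returns (angle in degrees within [0, 360), deviations in degrees).
    """
    if angle_axis not in AXES:
        raise ValueError(f"unknown axis: {angle_axis}")
    # The sweep angle is measured from the baseline and wrapped to one turn.
    angle = math.degrees(attitude[angle_axis] - baseline[angle_axis]) % 360.0
    # The two remaining axes only describe how far the device has tilted.
    deviations = {
        axis: math.degrees(attitude[axis] - baseline[axis])
        for axis in AXES
        if axis != angle_axis
    }
    return angle, deviations
```

Passing the angle axis as an argument keeps the orientation-dependent choice in one place, so the rest of the pipeline only ever sees one angle and two deviations.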

Synchronization and Data Structure

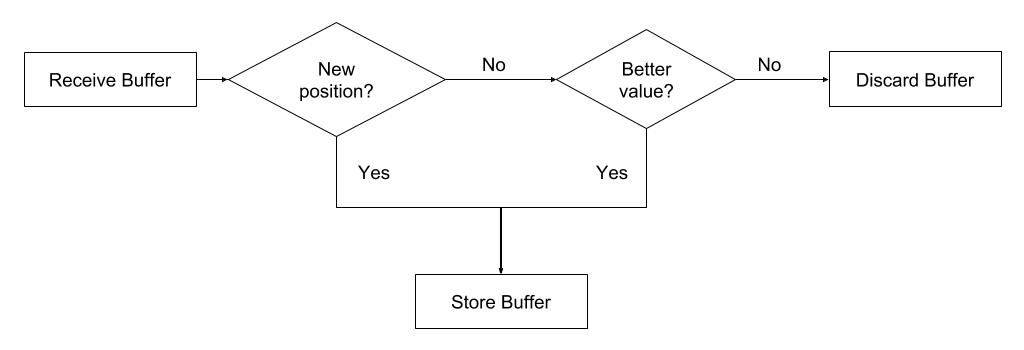

Our system synchronizes the image data from AVFoundation with the positioning data from CoreMotion to create a seamless 360-degree view. This process involves discarding low-quality images or images with insufficient positioning data, ensuring that the final result is composed of high-quality, well-positioned images.
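As a rough illustration of this synchronization step, the sketch below pairs each camera frame with the motion sample closest to it in time and drops frames that fail either check. The thresholds `max_gap` and `max_deviation`, the dictionary keys, and the use of tilt deviation as the quality criterion are assumptions for the example, not the values or rules used in our system.

```python
from bisect import bisect_left

def nearest_sample(times, t):
    """Index of the timestamp in the sorted list `times` closest to t."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    return i if times[i] - t < t - times[i - 1] else i - 1

def synchronize(frames, samples, max_gap=0.02, max_deviation=5.0):
    """Attach positioning data to frames and discard unusable ones.

    frames:  list of (timestamp, image_id) tuples.
    samples: list of dicts with "time", "angle", "deviation_pitch",
             "deviation_roll", sorted by "time".
    """
    times = [s["time"] for s in samples]
    kept = []
    if not times:
        return kept
    for t, image_id in frames:
        s = samples[nearest_sample(times, t)]
        if abs(s["time"] - t) > max_gap:
            continue  # no motion sample close enough: insufficient positioning data
        tilt = max(abs(s["deviation_pitch"]), abs(s["deviation_roll"]))
        if tilt > max_deviation:
            continue  # device tilted too far: treated here as a low-quality frame
        kept.append({"image": image_id, "angle": s["angle"], "time": t})
    return kept
```

A binary search keeps the lookup cheap even when motion samples arrive far more often than frames.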

The data structure for each 360-degree image capture includes:

- Unique identifier (UID)

- Timestamp (Unix time)

- Radius (optimal distance between images)

- List of elements, with each element containing image data, angle, time, pitch deviation, roll deviation, and acceleration
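The structure listed above could be modelled roughly as follows. The field names, the types, the choice to reference each image by file name, and the representation of acceleration as a single number are assumptions for this sketch. The actual schema may differ.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

@dataclass
class Element:
    image: str               # assumed: file name of the stored image
    angle: float             # position around the vehicle, in degrees
    time: float              # capture time of this frame
    deviation_pitch: float
    deviation_roll: float
    acceleration: float      # assumed: a single magnitude value

@dataclass
class Capture:
    uid: str
    timestamp: int           # Unix time
    radius: float
    elements: List[Element] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the capture, including all elements, to JSON text."""
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "Capture":
        """Rebuild a capture from JSON text produced by to_json."""
        raw = json.loads(text)
        elements = [Element(**e) for e in raw.pop("elements")]
        return cls(elements=elements, **raw)
```

Keeping the images outside the JSON and storing only a reference keeps the metadata file small enough for a mobile or web player to parse before any image is loaded.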

Exporting and Viewing the 360-Degree Image Capture

The system exports the 360-degree image captures as a JSON file, accompanied by a series of images. During the export process, images are reordered according to angles rather than time, which helps account for any gaps in the image sequence. The final images are then loaded from the disk and displayed in order, providing a seamless 360-degree viewing experience.
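A minimal sketch of the reordering and playback logic, assuming each element is a dictionary with an `angle` in degrees and an `image` reference. The helper names are hypothetical, and the gap check is an illustration of how missing coverage could be detected, not a description of our exporter.

```python
def order_by_angle(elements):
    """Sort elements by their position around the vehicle, not capture time."""
    return sorted(elements, key=lambda e: e["angle"] % 360.0)

def largest_gap(ordered):
    """Widest angular gap in degrees between neighbours, wrap-around included.

    Expects the output of order_by_angle.
    """
    angles = [e["angle"] % 360.0 for e in ordered]
    if len(angles) < 2:
        return 360.0
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    gaps.append(360.0 - angles[-1] + angles[0])  # from the last frame back to the first
    return max(gaps)

def frame_for(ordered, view_angle):
    """Pick the frame whose angle is circularly closest to view_angle."""
    def distance(e):
        d = abs(e["angle"] % 360.0 - view_angle % 360.0)
        return min(d, 360.0 - d)
    return min(ordered, key=distance)
```

Sorting by angle means a frame captured late in the session can still fill a hole left earlier in the sweep, and a player can map a drag gesture directly to an angle without knowing the capture order.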

Fine Adjustments and Known Issues

Our system incorporates several fine adjustments to optimize performance, such as preventing CoreMotion floods, handling data on separate threads, and calibrating buffer values. However, there are some limitations, such as the inability to compensate for vertical movement of the device. Using a gimbal device is recommended for achieving the best image quality.
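One way to read "preventing CoreMotion floods" is that motion updates should not arrive faster than the pipeline can process them. On iOS the update rate is normally configured through the `deviceMotionUpdateInterval` property of `CMMotionManager`. The sketch below shows the same idea as a software-side throttle. The class name and the interval value are assumptions for illustration and are not taken from our implementation.

```python
class SampleThrottle:
    """Accept at most one sample per `min_interval` (same unit as the timestamps)."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None  # timestamp of the last accepted sample

    def accept(self, timestamp):
        """Return True if the sample should be processed, False if dropped."""
        if self._last is not None and timestamp - self._last < self.min_interval:
            return False
        self._last = timestamp
        return True
```

For example, with `SampleThrottle(0.05)` a burst of samples 10 ms apart is reduced to roughly one sample every 50 ms, which bounds the work handed to the processing thread.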

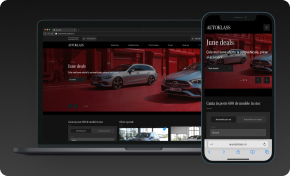

Practical Applications for the Automotive Industry

Our custom 360-degree imaging solution offers numerous applications within the automotive industry, including:

- Enhancing e-commerce and m-commerce platforms by providing immersive product presentations

- Offering virtual tours and interactive experiences for potential customers

- Integrating with social media and marketing campaigns to showcase vehicle features

- Incorporating the technology into automotive design, development, and manufacturing processes

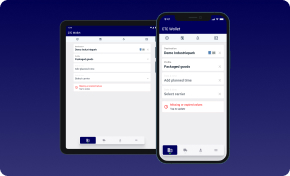

Conclusion

Our company’s 360-degree imaging system for the automotive industry is a powerful and versatile tool that can revolutionize the way vehicles are presented, marketed, and experienced by customers. By leveraging our extensive software development expertise and utilizing cutting-edge iOS and Android technologies, we have created a solution that delivers high-quality, immersive visuals tailored to the unique requirements of the automotive sector.

We are committed to continuously refining and expanding our system to incorporate new technologies, address any known issues, and respond to the evolving needs of the industry. By partnering with us, automotive businesses can enhance their digital presence, attract and engage customers, and ultimately drive sales.

For more information about our 360-degree imaging solution for the automotive industry, or to discuss how we can help you create a customized solution that meets your specific needs, please contact us. We look forward to collaborating with you to create the next generation of immersive automotive experiences.