Genomic data has grown to petabyte scale, forcing genetic testing laboratories to rethink their IT infrastructure. The flood of DNA-sequencing output demands reliable, scalable analysis frameworks. Forward-thinking labs are investing in high-performance computing (HPC) clusters and cloud platforms to turn this data into timely, actionable findings. This brief explains the scale of the data surge, outlines an advanced bioinformatics-pipeline framework, and shows how customized software delivers cost-efficient scalability. HyperSense Software partners with genetic testing labs to streamline pipelines, integrate cloud solutions, and accelerate research.

The Genomic Data Deluge

Genetic data volumes are growing rapidly. Sequencing a single human genome produces roughly 100 gigabytes of data. Because sequencing now costs around $600 per sample, laboratories can process an unprecedented number of samples. Large-scale initiatives such as the UK Biobank have generated 27.5 petabytes of data from 500,000 individual genomes. Genomic data is projected to reach exabyte scale within five years, exceeding the volume of data YouTube handles annually. This abundance of genetic data creates opportunities, but also significant technical challenges for lab executives in data management, processing speed, and infrastructure capacity.
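
As a quick sanity check on these figures, the short Python sketch below divides the UK Biobank total by its number of genomes and, conversely, sizes the same cohort at the ~100 GB per genome quoted above. It assumes decimal (SI) units. The gap between the two results likely reflects differences in what is stored (for example, compressed or processed files rather than raw reads); that interpretation is an assumption, not something the figures themselves state.

```python
# Back-of-envelope storage arithmetic using the figures quoted in the text.
# Assumption: decimal (SI) units, i.e. 1 GB = 10**9 bytes, 1 PB = 10**15 bytes.
GB = 10**9
PB = 10**15


def implied_gb_per_genome(total_pb: float, genomes: int) -> float:
    """Average stored size per genome implied by a project-level total."""
    return total_pb * PB / genomes / GB


def total_pb(genomes: int, gb_per_genome: float) -> float:
    """Total storage in petabytes for a cohort at a given per-genome size."""
    return genomes * gb_per_genome * GB / PB


if __name__ == "__main__":
    # UK Biobank: 27.5 PB across 500,000 genomes.
    print(f"{implied_gb_per_genome(27.5, 500_000):.0f} GB per genome")
    # The same cohort at ~100 GB of output per genome.
    print(f"{total_pb(500_000, 100):.0f} PB")
```

The first call yields about 55 GB per genome; the second about 50 PB, so the same order of magnitude as the published total.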

Conventional lab IT infrastructure cannot handle data sets of this size. Modern sequencers produce terabytes of data per run, more than most on-premises servers can store and process. As a result, labs must rethink their entire technology stack, and HPC clusters and cloud platforms have become essential for genomic research facilities. Without investment in flexible data-handling systems, even excellent laboratories cannot deliver timely results to patients or researchers.

For a detailed explanation of how cloud-based infrastructure supports genomics data processing, see Leveraging AWS for Scalable Genomics Data Storage and Processing.

HPC and Cloud: The Backbone of Genomic Analysis

To process petabyte-scale genomic data, genetic testing labs need HPC and cloud computing as their core infrastructure. HPC, whether delivered through on-premises clusters or cloud-based servers, lets labs parallelize complex analyses across many processors. A standard genome pipeline can consume many CPU hours; on HPC infrastructure, the same work can finish in a fraction of the time it takes on a single machine. GPU-accelerated computing has cut DNA analysis times further, to 40–60 times faster than standard methods. With HPC, labs get results faster and can process many samples at once.
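
One common way pipelines exploit this parallelism is a scatter-gather pattern: split a genome into regions (here, one per chromosome), run the same job on each region concurrently, then collect the per-region outputs in order. The Python sketch below shows only the orchestration logic. `call_variants_in_region` is a hypothetical stand-in; a real version would launch an external variant caller and return the path of the VCF it wrote. Threads are used because the heavy lifting would happen in those external processes.

```python
from concurrent.futures import ThreadPoolExecutor

# Primary human chromosomes; a real pipeline would read these from the reference index.
CHROMOSOMES = [f"chr{i}" for i in range(1, 23)] + ["chrX", "chrY"]


def call_variants_in_region(sample_id: str, region: str) -> str:
    """Hypothetical stand-in for one variant-calling job restricted to `region`.

    In practice this would start an external tool (e.g. via subprocess.run)
    and return the path of the per-region VCF it produced.
    """
    return f"{sample_id}.{region}.vcf.gz"


def scatter_gather(sample_id: str, regions: list[str], max_workers: int = 8) -> list[str]:
    """Run one job per region concurrently, then gather outputs in region order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map preserves input order, so results line up with `regions`.
        return list(pool.map(lambda r: call_variants_in_region(sample_id, r), regions))


if __name__ == "__main__":
    parts = scatter_gather("S1", CHROMOSOMES)
    print(len(parts), parts[0], parts[-1])
```

The gathered per-region files would then be merged into one VCF, for example with a concatenation step such as `bcftools concat`.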

The cloud offers HPC scalability without the cost of buying and maintaining hardware. AWS, Google Cloud, and Microsoft Azure provide dedicated genomics services with on-demand access to effectively unlimited compute and storage. This flexibility is game-changing: when sequencing volumes peak, labs scale up their compute capacity, then scale back down afterwards, paying only for what they use. Storage is handled just as easily; Amazon S3, for example, can hold petabyte-scale genomic files and is designed for 99.999999999% data durability. According to a related industry assessment, in-house HPC systems are expensive to operate and difficult to maintain, whereas cloud-based infrastructure meets genomics' requirements for speed and large-scale data management.
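
To make the pay-per-use argument concrete, the Python sketch below sizes a worker pool to each day's sample queue and compares the compute cost of a cluster permanently sized for peak demand with one that scales day by day. The queue sizes, samples per worker, and hourly rate are hypothetical, and the model deliberately ignores node start-up time, storage, and data-transfer charges.

```python
import math


def desired_workers(queued_samples: int, samples_per_worker: int,
                    min_workers: int = 0, max_workers: int = 100) -> int:
    """Size a worker pool to the current queue, clamped to [min_workers, max_workers]."""
    needed = math.ceil(queued_samples / samples_per_worker)
    return max(min_workers, min(max_workers, needed))


def compare_costs(daily_queue: list[int], samples_per_worker: int,
                  hourly_rate: float, hours_per_day: float = 24.0) -> tuple[float, float]:
    """Return (fixed_cost, elastic_cost) over the days in `daily_queue`.

    fixed:   a cluster sized for the busiest day, running every day.
    elastic: each day's pool sized to that day's queue.
    """
    per_day = [desired_workers(q, samples_per_worker) for q in daily_queue]
    fixed = max(per_day) * len(daily_queue) * hours_per_day * hourly_rate
    elastic = sum(per_day) * hours_per_day * hourly_rate
    return fixed, elastic


if __name__ == "__main__":
    # Hypothetical week with one sequencing peak on day 3.
    week = [10, 10, 80, 10, 10, 0, 0]
    print(compare_costs(week, samples_per_worker=10, hourly_rate=1.0))
```

With these made-up inputs the fixed cluster costs 1344 units and the elastic one 288, because capacity is only paid for on the day it is needed.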

For executives, cloud HPC also delivers dependable service, secure environments, and support for worldwide scientific collaboration. Cloud providers maintain rigorous security controls to protect patients' genetic information and operate disaster-recovery sites across multiple geographic regions. Because the cloud vendor handles server management in the background, a lab can concentrate on its scientific work. “A properly designed cloud pipeline shifts server management responsibilities to the provider, allowing your team to focus on scientific development rather than infrastructure maintenance,” notes a HyperSense Software cloud whitepaper. The same source notes that optimizing cloud architecture speeds up data processing for diagnosis and research and provides automatic scalability.

Real-world examples underscore these gains. When Theragen Bio migrated its genome analysis workflows to AWS, standard data processing time dropped from 40 hours to just 4 hours, a tenfold speedup. In the cloud, the company could analyze 400 genomes in parallel, something that would have been difficult on-premises. After further optimizing its AWS pipeline by changing how data paths and storage were managed, the company cut cloud computing spending per run by 60%. The return on HPC and cloud investment becomes clear at scale: faster delivery of results, more tests handled, and lower cost per analysis.
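
The arithmetic behind these figures can be written out in a few lines of Python. The sketch uses only the numbers quoted above (40 h before, 4 h after, a 60% per-run cost reduction, 400 genomes in parallel) and assumes, for simplicity, that each parallel slot processes one genome in the quoted 4 hours with no queueing or start-up overhead.

```python
import math


def migration_summary(hours_before: float, hours_after: float, cost_reduction: float,
                      genomes: int, parallel_slots: int) -> dict:
    """Summarize speedup, relative cost, and batch wall-clock time.

    Assumes every genome takes `hours_after` hours and each slot handles
    one genome at a time (a simplification).
    """
    batches = math.ceil(genomes / parallel_slots)
    return {
        "speedup": hours_before / hours_after,
        "relative_cost_per_run": 1 - cost_reduction,   # 0.4 means 40% of the old cost
        "one_at_a_time_hours": genomes * hours_after,  # processing genomes sequentially
        "parallel_wall_clock_hours": batches * hours_after,
    }


if __name__ == "__main__":
    print(migration_summary(40, 4, 0.60, genomes=400, parallel_slots=400))
```

Under these assumptions a batch of 400 genomes finishes in about 4 hours of wall-clock time instead of 1,600 hours run one after another.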

Web Development – The Ultimate Guide for Businesses explores the value of tailored front-end and back-end systems, especially in industries with data-intensive needs like healthcare.

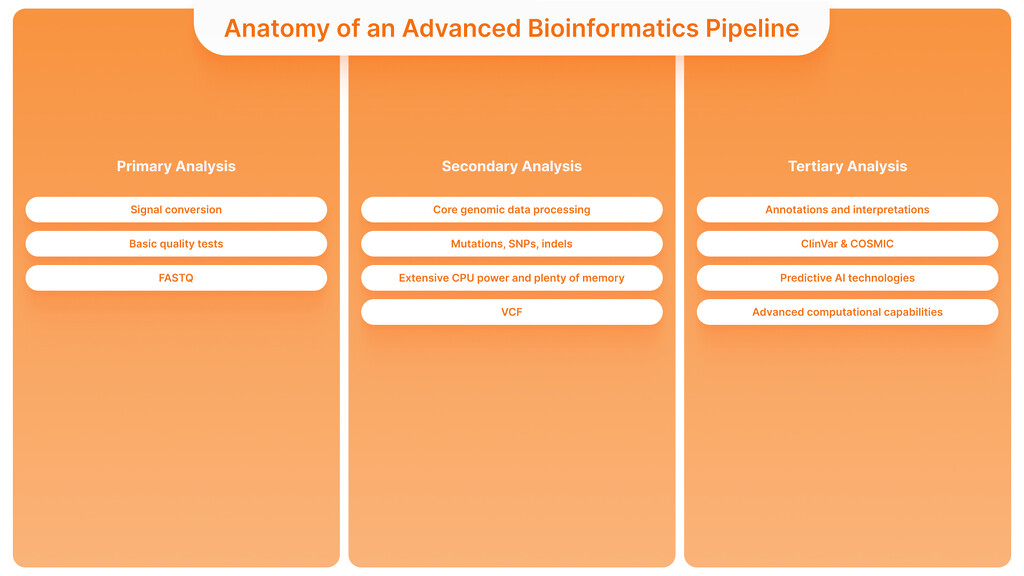

Anatomy of an Advanced Bioinformatics Pipeline

Every genetic testing laboratory relies on a bioinformatics pipeline to turn raw sequencing data into meaningful genetic findings. A bioinformatics pipeline is “a series of software algorithms that transform raw sequencing data into interpretations derived from this data”. Modern genetic testing involves several computationally intensive stages, commonly grouped into three phases:

- Primary Analysis: The first stage processes the sequencing instrument's output, converting raw signals into sequence reads and running basic quality checks. The result is a set of sequence data files (FASTQ, etc.) ready for downstream analysis.

- Secondary Analysis: The core genomic processing happens here, through sequence alignment and variant calling. Algorithms align millions or billions of DNA reads to a reference genome and detect the genetic variants (mutations, SNPs, indels) present in the sample. These steps demand substantial CPU power and memory, so they rely on high-performance computing to run quickly. The output for each sample is a list of variants in VCF format.

- Tertiary Analysis: The third phase covers annotation, interpretation, and report preparation. Here the raw variant list is analyzed for its medical and scientific significance. Laboratories query databases and knowledge bases such as ClinVar and COSMIC for information on variant-disease associations and variant frequencies. Advanced laboratories also use predictive AI tools to estimate the effects of novel variants and assess the significance of findings. Results are then compiled into readable clinical reports that can be fed into laboratory information systems for clinical staff. This final step requires both the computational capacity to run interpretation software and user-friendly software design to present results clearly.
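
To illustrate the hand-off from secondary to tertiary analysis, the Python sketch below reads VCF data lines into simple variant records and labels each one from a lookup table. The table is a hypothetical, toy stand-in for a knowledge base such as ClinVar (its entries are made up, not real records), and the parser reads only the fixed CHROM, POS, ID, REF and ALT columns, so it is far from a full VCF implementation.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Variant:
    chrom: str
    pos: int
    ref: str
    alt: str


def parse_vcf_line(line: str) -> list[Variant]:
    """Split one VCF data line into one Variant per ALT allele.

    Only CHROM, POS, ID, REF and ALT are read; QUAL, FILTER, INFO and
    sample columns are ignored in this sketch.
    """
    chrom, pos, _id, ref, alts = line.rstrip("\n").split("\t")[:5]
    return [Variant(chrom, int(pos), ref, alt) for alt in alts.split(",")]


def read_vcf(lines: Iterable[str]) -> list[Variant]:
    variants: list[Variant] = []
    for line in lines:
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines, '##' meta lines and the '#CHROM' header
        variants.extend(parse_vcf_line(line))
    return variants


def annotate(variants: list[Variant], knowledge_base: dict) -> list[tuple[Variant, str]]:
    """Attach a label to each variant from a local lookup table.

    `knowledge_base` is a hypothetical dict keyed by (chrom, pos, ref, alt).
    """
    return [(v, knowledge_base.get((v.chrom, v.pos, v.ref, v.alt), "not found"))
            for v in variants]


if __name__ == "__main__":
    vcf = [
        "##fileformat=VCFv4.2",
        "#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO",
        "chr1\t1000\t.\tA\tG,T\t50\tPASS\t.",
    ]
    toy_kb = {("chr1", 1000, "A", "G"): "toy-label"}
    for variant, label in annotate(read_vcf(vcf), toy_kb):
        print(variant, label)
```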

Modern pipelines are far more than a collection of tools; they are orchestrated, automated workflows. When the sequencer finishes processing a batch, the raw data files are uploaded to cloud storage automatically, and their arrival triggers alignment, followed by variant calling and each subsequent step until the workflow completes. Because these event-driven pipelines need no manual hand-offs, they avoid delays waiting for human intervention and minimize human error. Multi-step processing is typically managed with workflow frameworks such as Cromwell/WDL, Nextflow, and Snakemake, alongside cloud services such as AWS Step Functions and AWS Batch. The result is a high-speed genetic insight factory: data flows from input to output through a predefined sequence of computational transformations. For lab executives, this means automated, reproducible processes that run continuously with little supervision and scale with fluctuating demand.
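
A minimal sketch of the event-driven trigger described above, in Python: a function receives an Amazon S3 event notification, waits for a completion marker object rather than reacting to every uploaded file, and returns the run to start together with its steps in dependency order. It reads only the `Records[].s3.bucket.name` and `Records[].s3.object.key` fields of the notification; the marker name, step names, and returned dictionary are hypothetical. In a real deployment the result would be handed to a workflow engine (for example, by starting an AWS Step Functions execution) instead of being returned.

```python
from graphlib import TopologicalSorter
from urllib.parse import unquote_plus

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE = {
    "align": {"upload"},
    "call_variants": {"align"},
    "annotate": {"call_variants"},
    "report": {"annotate"},
}

# Hypothetical marker object written once all files of a run are uploaded.
COMPLETION_MARKER = "_UPLOAD_COMPLETE"


def execution_order(pipeline: dict) -> list[str]:
    """Order steps so every step runs after its dependencies."""
    return list(TopologicalSorter(pipeline).static_order())


def handle_s3_event(event: dict) -> list[dict]:
    """Decide which pipeline runs to start from an S3 event notification."""
    runs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        if not key.endswith(COMPLETION_MARKER):
            continue  # ignore individual data files; wait for the marker
        run_prefix = key[: -len(COMPLETION_MARKER)]
        runs.append({"input": f"s3://{bucket}/{run_prefix}",
                     "steps": execution_order(PIPELINE)})
    return runs


if __name__ == "__main__":
    event = {"Records": [
        {"s3": {"bucket": {"name": "lab-raw"}, "object": {"key": "runs/run+001/lane1.fastq.gz"}}},
        {"s3": {"bucket": {"name": "lab-raw"}, "object": {"key": "runs/run+001/_UPLOAD_COMPLETE"}}},
    ]}
    print(handle_s3_event(event))
```

Declaring dependencies as a graph, rather than hard-coding the order, is the same idea the workflow frameworks above formalize: adding a step means adding one entry, and the execution order follows.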

Automation and ROI: The Business Case for Investment

For genetic testing labs, strategic investment in data analysis pipelines and bioinformatics capabilities is a business decision that can deliver substantial ROI. Workflow automation, custom software development, and bioinformatics integration also create competitive advantages.

Faster Turnaround Times

High-performance automated pipelines shorten the time from sample processing to results. Faster results mean clinicians and patients get answers sooner, and laboratories can handle more cases each week. With automation and cloud scaling, laboratories can deliver results within hours rather than days. In clinical genomics, speed directly benefits patients, for example through rapid genomic analyses in NICUs and oncology wards. Fast service delivery also builds customer trust and market competitiveness.

Higher Throughput & Scalability

Advanced pipelines, combined with elastic cloud resources, let labs process many samples concurrently at full capacity. A laboratory can expand testing without a proportional increase in staff or facility space. The ability to analyze hundreds of genomes simultaneously allows Theragen Bio to pursue major research contracts and large projects that smaller laboratories could not handle. Emerging large-scale pharmaceutical collaborations and nationwide population genomics projects require labs with scalable infrastructure, both to participate and to operate profitably.

Cost Efficiency at Scale

Investments in HPC infrastructure and pipeline development may carry high upfront costs, but they pay off through operational savings. Automation reduces the hands-on labor each analysis requires; the savings can lower staff costs or free experts to focus on interpretation rather than manual data processing. Cloud-based pipelines eliminate large upfront hardware purchases and reduce server maintenance, since labs pay by usage and can adjust resource consumption to control spending. Well-optimized cloud workflows can cut computing costs per run by more than 50% through efficient resource management. Automated error detection and consistent quality checks also spare laboratories the cost of rerunning assays and troubleshooting errors after the fact.
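
A simple payback estimate helps frame this trade-off. The Python sketch below adds up monthly compute and labor savings and divides the upfront investment by them. Every input (run volume, cost per run, reduction fraction, hours saved, labor rate, upfront cost) is a hypothetical placeholder for a lab's own numbers, and the model ignores financing costs and ongoing cloud fees outside compute.

```python
import math
from typing import Optional


def monthly_savings(runs_per_month: int, compute_cost_per_run: float, compute_reduction: float,
                    hands_on_hours_saved_per_run: float, hourly_labor_cost: float) -> float:
    """Compute plus labor savings per month (all inputs hypothetical)."""
    compute = runs_per_month * compute_cost_per_run * compute_reduction
    labor = runs_per_month * hands_on_hours_saved_per_run * hourly_labor_cost
    return compute + labor


def payback_months(upfront_cost: float, savings_per_month: float) -> Optional[int]:
    """Whole months until cumulative savings cover the upfront cost, or None if never."""
    if savings_per_month <= 0:
        return None
    return math.ceil(upfront_cost / savings_per_month)


if __name__ == "__main__":
    s = monthly_savings(200, 50.0, 0.5, 0.5, 60.0)
    print(s, payback_months(120_000, s))
```

With these illustrative inputs the lab saves 11,000 per month and recovers a 120,000 investment in 11 months; the point is the structure of the calculation, not the numbers.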

Improved Accuracy & Consistency

Bioinformatics software developed specifically for the laboratory enables standardized quality checks across all analysis procedures, reducing human error and producing consistent results. Consistency is essential for accredited labs: because automated pipelines execute their validated procedures identically on every run, they support compliance with CAP/CLIA standards and generate audit trails for each test. Robust pipelines raise quality standards and reduce the need for expensive rework or corrections.
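
The Python sketch below shows one way an automated QC gate and audit record might fit together. Each sample's metrics are checked against fixed acceptance thresholds, and the outcome is written out with a SHA-256 checksum of the input, the pipeline version, and a UTC timestamp. The metric names, threshold values, and version string are hypothetical; real limits come from a lab's own validation, and a real audit trail would be stored immutably rather than printed.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical acceptance thresholds: metric -> ("min" | "max", limit).
QC_THRESHOLDS = {
    "mean_coverage": ("min", 30.0),
    "percent_q30": ("min", 80.0),
    "duplicate_rate": ("max", 0.20),
}


def qc_gate(metrics: dict, thresholds: dict = QC_THRESHOLDS) -> list[str]:
    """Return a list of failure messages; an empty list means the sample passed."""
    failures = []
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} < {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} > {limit}")
    return failures


def audit_record(sample_id: str, input_bytes: bytes, pipeline_version: str, metrics: dict) -> dict:
    """Build an audit entry tying the QC outcome to the exact input and pipeline version."""
    failures = qc_gate(metrics)
    return {
        "sample_id": sample_id,
        "input_sha256": hashlib.sha256(input_bytes).hexdigest(),
        "pipeline_version": pipeline_version,
        "metrics": metrics,
        "qc_passed": not failures,
        "qc_failures": failures,
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    rec = audit_record("S1", b"ACGT", "1.4.2",
                       {"mean_coverage": 35.0, "percent_q30": 92.1, "duplicate_rate": 0.08})
    print(json.dumps(rec, indent=2))
```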

Strategic Flexibility and Innovation

Well-integrated informatics systems make it easy for a laboratory to adopt new technologies. A modular pipeline design lets labs incorporate new AI-based variant interpretation tools or sequencing platforms without extensive rebuilding. The data collected along the way can feed variant and outcome data lakes for secondary analysis, supporting research, machine learning model development, and new services. A lab with optimized data pipelines can use its structured information to develop high-value offerings, such as drug discovery partnerships and personalized medicine solutions, that create new revenue streams.
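
Modularity usually comes down to a stable interface between the pipeline and its components. In the Python sketch below, every annotator exposes the same `annotate` method, so a new tool (such as an AI-based interpretation model) can be added to the list without touching the rest of the pipeline. Both annotator classes are hypothetical: one reads a toy lookup table, and the other wraps any scoring function you supply.

```python
from typing import Callable, Protocol

VariantKey = tuple[str, int, str, str]  # (chrom, pos, ref, alt)


class Annotator(Protocol):
    """Interface every pluggable annotator is expected to satisfy."""
    name: str

    def annotate(self, variant: VariantKey) -> dict: ...


class LookupAnnotator:
    """Labels variants from a local table (toy stand-in for a knowledge base)."""

    def __init__(self, name: str, table: dict):
        self.name = name
        self.table = table

    def annotate(self, variant: VariantKey) -> dict:
        return {self.name: self.table.get(variant, "unknown")}


class ScoreModelAnnotator:
    """Hypothetical wrapper around a predictive model exposed as a scoring function."""

    def __init__(self, name: str, score_fn: Callable[[VariantKey], float]):
        self.name = name
        self.score_fn = score_fn

    def annotate(self, variant: VariantKey) -> dict:
        return {self.name: self.score_fn(variant)}


def run_annotators(variant: VariantKey, annotators: list[Annotator]) -> dict:
    """Merge the output of every registered annotator for one variant."""
    result: dict = {}
    for annotator in annotators:
        result.update(annotator.annotate(variant))
    return result


if __name__ == "__main__":
    v = ("chr1", 1000, "A", "G")
    pipeline = [LookupAnnotator("db", {v: "toy-label"}),
                ScoreModelAnnotator("model", lambda var: 0.5)]
    print(run_annotators(v, pipeline))
```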

When evaluating ROI, lab executives should recognize that investing in enhanced data pipelines and HPC/cloud infrastructure delivers both near-term returns and long-term market advantage. Faster processing improves current profitability and customer retention, while operational scalability lays the foundation for future growth and innovation. Labs that build automated, optimized genomic pipelines will be positioned to handle the petabyte-scale expansion, whereas laboratories without these capabilities risk serious data-management challenges.

For a closer look at operational enhancements through automation, read Unlocking Business Efficiency with Intelligent Document Processing (IDP) on AWS.

HyperSense Software: Your Partner in Bioinformatics Innovation

Implementing sophisticated pipelines and cloud architecture can be challenging. HyperSense Software is an ISO-certified solution provider that turns advanced technology ideas into custom, business-oriented software. Our team produces “tailor-made software applications designed specifically for your business processes, enabling you to innovate faster and achieve greater efficiency”. Genetic testing labs can benefit significantly from our expertise in designing and implementing the data analysis systems and platform infrastructure best suited to their needs.

HyperSense has particular strength in integrating cloud technologies with high-performance computing orchestration and genetic data workflow automation. Our specialists understand genomic data, security practices, and healthcare IT regulatory requirements in detail. Our cloud-based platform helps biotech leaders and researchers process and analyze large genomic datasets, with secure, regulation-compliant storage at reduced operating cost. By combining AI analytics with event-driven frameworks, labs can accelerate their genomic research and extract actionable results from complex datasets. Your team can draw on HyperSense as an additional resource to build AWS-based genomics pipelines, optimize parallel processing workflows, and connect pipeline results to your Laboratory Information Management System (LIMS).

Our Approach

We work alongside your team to achieve defined results. First, we assess your current processes and pain points. Are long analysis times creating a bottleneck? Is data management becoming unwieldy? Do you want to offer new tests but need informatics support to run them? We then design the solution based on that assessment. Implementation typically involves building a cloud data lake for sequencer output, creating a workflow automation system that converts raw data into reports, and developing dedicated software components for variant analysis and results presentation. We stay focused on ROI and strategic value: the goal is a solution that not only works but also delivers tangible bottom-line benefits through greater operational efficiency and new capabilities. Partnering with HyperSense also brings long-term advantages, including knowledge transfer to your staff and continuous support that evolves with your business requirements.

HyperSense Software helps your lab turn extensive genomic data into efficient analysis pathways. We combine expertise in cloud technology and bioinformatics with business-oriented services so that solutions align with your strategic requirements. The genomic testing field is advancing rapidly as data volumes grow and analytical approaches evolve. Through strategic investment in data analysis pipelines and the right partnerships, your laboratory can lead this transformation. With our guidance, laboratories convert petabyte-scale datasets into accessible knowledge and replace complex processes with simple solutions, delivering scientific results faster.

Actionable Insight

Executives at genetic testing labs should act now. Review your current data pipeline to identify gaps. Estimate the data volume your operations will need to manage over the next 3 to 5 years, then check whether your current infrastructure can meet those needs. If it falls short, evaluate HPC and cloud options and find expert partners to help you carry out the transformation. Labs that implement advanced bioinformatics systems today will be the leaders of genomic medicine in the years ahead.
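
For the volume estimate, a compound-growth projection is a reasonable first approximation. The Python sketch below projects cumulative storage year by year from this year's data generation and an assumed annual growth rate. It assumes all data is retained and the growth rate stays constant; both are simplifications, and the example inputs are hypothetical.

```python
def project_storage_tb(current_tb_per_year: float, annual_growth: float,
                       years: int, retained_tb: float = 0.0) -> list[float]:
    """Cumulative storage (TB) at the end of each year.

    Assumes yearly data generation grows by `annual_growth` (0.5 = 50%)
    each year and that all data, including `retained_tb`, is kept.
    """
    totals = []
    cumulative = retained_tb
    yearly = current_tb_per_year
    for _ in range(years):
        cumulative += yearly
        totals.append(cumulative)
        yearly *= 1 + annual_growth
    return totals


if __name__ == "__main__":
    # Hypothetical lab: 100 TB generated this year, volumes growing 50% per year.
    for year, total in enumerate(project_storage_tb(100.0, 0.5, 5), start=1):
        print(f"Year {year}: {total:,.0f} TB")
```

Comparing the year-5 figure with current capacity, and with the per-TB cost of each storage option, gives a first indication of whether the existing infrastructure will keep up.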

Contact us today to discuss your journey toward the future.