Strategic technology management lives by its Key Performance Indicators (KPIs). In an age of accelerated innovation, including generative AI, edge computing, and quantum computing, one question tech executives and product managers should ask is: how do we determine whether these technologies deliver actual business value? The answer depends on which KPIs are defined and measured. As the saying goes, you cannot manage what you cannot measure, and that holds for emerging technologies. In this newsletter-style briefing, we look at the most applicable and useful KPIs for AI and other emerging technologies, why they are essential to business strategy, and how to measure and optimize them.

Companies today are adopting generative AI, deploying edge computing infrastructure, and exploring quantum computing breakthroughs. Yet according to industry research, most leaders are still not aligned on how success in these areas should be measured. The KPI landscape for new technology is not one-size-fits-all. Without proper measurement, technology investments risk being underfunded or misdirected, which wastes resources and leads to lost opportunities. This article will help you cut through the hype and focus on the KPIs that matter, so you can quantify AI and emerging tech projects in clear business terms.

Why KPIs Matter for AI and Emerging Tech

It is easy to get lost in experimentation and technical hurdles when introducing innovative technology. KPIs connect technical performance to business results. They translate complex technical progress into evidence of value for stakeholders. This alignment matters: executives must be able to see how an AI model or edge deployment will affect customer experience, efficiency, or revenue.

Thought leaders stress the use of two groups of measures: technology-aligned KPIs and business-aligned KPIs. In practice, this means monitoring both technical performance (e.g., model accuracy, system uptime) and business performance (e.g., cost savings, user adoption, revenue growth). Both views are required. An AI system could reach 99% accuracy as a technical KPI, but that is of little use unless it also improves customer satisfaction or conversion rates (business KPIs).

In addition, KPIs create accountability. They ensure that new technology projects do not rest on empty promises of success. An AI activation leader at Deloitte said that in the rapidly changing environment of generative AI, it is important to establish KPIs early, because without them organizations cannot track value directly or justify continuing the work. In a nutshell, what gets measured gets managed and, eventually, funded.

Lastly, KPIs are essential to agility. By tracking the crucial metrics, teams can adjust based on data. When a generative AI pilot is not reaching its intended user engagement levels, or an edge computing deployment is not reducing latency as planned, KPIs that are tracked in a timely manner will expose these shortcomings. This makes it possible to correct course before minor problems turn into major failures. Real-time insight into KPIs is a competitive advantage in an environment where technology (and competitors) move fast.

For an excellent operational playbook on deploying AI at scale, see AI Integration for Business: Practical Steps to Implement and Scale.

KPIs for AI and Generative AI: Focusing on Value and Adoption

AI projects, and generative AI applications in particular, need a balanced scorecard of KPIs that covers both model performance and business value. These are some of the most pertinent KPIs that tech leaders may want to focus on for AI initiatives:

Model Performance and Quality

Even at the executive level, it is worth monitoring how well AI models perform their core task. For predictive models, accuracy, precision/recall, or F1-score show the extent to which the AI achieves its purpose. For generative AI, the concept of accuracy does not apply as neatly, so quality is measured through human feedback or AI-based evaluators on aspects such as coherence, relevance, and safety. Strong performance KPIs here indicate that the AI's output is satisfactory and of good quality (e.g., an AI chatbot providing appropriate answers, or a generative model producing quality text).
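
To make the predictive-model metrics concrete, here is a minimal sketch of how accuracy, precision, recall, and F1 could be derived from a classifier's outcome counts. The `ConfusionCounts` container and the function name are hypothetical, chosen only for illustration; most ML libraries offer equivalent helpers.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Hypothetical container for a binary classifier's outcome counts."""
    true_positives: int
    false_positives: int
    false_negatives: int
    true_negatives: int


def classification_kpis(c: ConfusionCounts) -> dict[str, float]:
    """Return accuracy, precision, recall, and F1 as fractions in [0, 1]."""
    total = (c.true_positives + c.false_positives
             + c.false_negatives + c.true_negatives)
    predicted_positive = c.true_positives + c.false_positives
    actual_positive = c.true_positives + c.false_negatives

    # Guard each ratio against an empty denominator.
    accuracy = (c.true_positives + c.true_negatives) / total if total else 0.0
    precision = c.true_positives / predicted_positive if predicted_positive else 0.0
    recall = c.true_positives / actual_positive if actual_positive else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Illustrative counts only, not real results.
kpis = classification_kpis(ConfusionCounts(80, 20, 10, 890))
```

With these made-up counts, accuracy is high (0.97) while precision is only 0.80, which is why a single headline accuracy number can hide weaknesses that matter to the business.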

User Adoption and Engagement

The success of generative AI depends on human adoption: if users or employees do not use the AI tools, even the best model is meaningless. The main KPIs to track are adoption rate (the proportion of target users who actively use the AI tool) and frequency of use (how often users engage with the AI). For example, how many customer service requests are processed via an AI assistant, or how many product managers actively use a generative AI feature in their day-to-day work? A rising adoption rate indicates that the AI solution is being accepted and integrated into regular practice. A decline, in contrast, could point to a usability or trust problem that needs to be resolved.
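
The two adoption KPIs described above reduce to simple ratios. The sketch below assumes you can already count active users, target users, and interactions over a reporting period; the function names are hypothetical.

```python
def adoption_rate(active_users: int, target_users: int) -> float:
    """Share of the target population that actively used the AI tool."""
    if target_users <= 0:
        raise ValueError("target_users must be positive")
    return active_users / target_users


def uses_per_active_user(total_interactions: int, active_users: int) -> float:
    """Average number of interactions per active user in the period."""
    return total_interactions / active_users if active_users else 0.0


# Illustrative figures only: 300 of 500 targeted employees used the tool,
# generating 1,200 interactions in the period.
rate = adoption_rate(300, 500)                # 0.6
frequency = uses_per_active_user(1200, 300)   # 4.0
```

Tracking both numbers together helps distinguish broad but shallow adoption from a small group of heavy users.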

Efficiency and Productivity Gains

Among the biggest promises of AI is that it can do more with less. Efficiency gains are therefore essential KPIs. These may include time saved on work (e.g., average document processing time before vs. after AI), the percentage of work done automatically (e.g., what proportion of content or customer support tickets are handled by AI without human involvement), and the cost per output (e.g., cost per piece of content produced by AI vs. by a human). For generative AI applied to content creation, content efficiency (how fast or cheaply new content is created) or cost per asset created is a reasonable KPI that relates directly to ROI. If an AI content generator can create marketing copy 3x faster, that should translate into cost savings on content creation or additional content volume, which you should also track in your KPI dashboard.
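
As a rough illustration, the three efficiency KPIs named above can be computed as follows. This is a sketch under the assumption that before/after timings, task counts, and costs are already being collected; all names and figures are hypothetical.

```python
def time_saved_fraction(before_minutes: float, after_minutes: float) -> float:
    """Fraction of task time saved after introducing AI (0.5 means half)."""
    if before_minutes <= 0:
        raise ValueError("before_minutes must be positive")
    return (before_minutes - after_minutes) / before_minutes


def automation_rate(automated_tasks: int, total_tasks: int) -> float:
    """Share of tasks completed by AI without human involvement."""
    return automated_tasks / total_tasks if total_tasks else 0.0


def cost_per_output(total_cost: float, outputs: int) -> float:
    """Average cost of producing one asset, ticket resolution, etc."""
    if outputs <= 0:
        raise ValueError("outputs must be positive")
    return total_cost / outputs


# A task that took 30 minutes and now takes 10 is "3x faster",
# which corresponds to roughly 67% of the time saved.
saved = time_saved_fraction(30, 10)
```

Note that "3x faster" and "67% time saved" describe the same change; agreeing on one phrasing avoids confusion in dashboards.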

Customer Satisfaction and Quality Impact

Ultimately, new technology must enhance the customer experience and the quality of products. KPIs such as Customer Satisfaction Score (CSAT) or Net Promoter Score (NPS) specific to AI-driven interactions are becoming increasingly relevant. For example, AI-driven CSAT, which asks whether customers are as satisfied communicating with an AI chatbot as with a human agent, can indicate whether the AI is actually providing value. Likewise, quality measures on generative AI content (perhaps through user ratings or error rates in AI output) can ensure that efficiency does not come at the expense of quality. If customer satisfaction declines because of AI-written responses, your KPIs should surface that warning sign. Some organizations go as far as tracking "thumbs up/down" reviews of AI outputs directly as a user satisfaction signal to improve models.
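
For readers who want the arithmetic behind these scores, here is a small sketch. It assumes the common conventions of a 1-5 CSAT scale where 4 and 5 count as satisfied, and a 0-10 NPS scale with promoters at 9-10 and detractors at 0-6; your survey tool may define them differently.

```python
def csat(ratings: list[int], satisfied_threshold: int = 4) -> float:
    """Share of responses at or above the 'satisfied' threshold."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    return sum(r >= satisfied_threshold for r in ratings) / len(ratings)


def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("scores must not be empty")
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100 * (promoters - detractors) / len(scores)


# Illustrative comparison of AI-handled vs. human-handled conversations.
ai_csat = csat([5, 4, 3, 5, 2])      # 0.6
human_csat = csat([5, 5, 4, 3, 4])   # 0.8
```

Computing the same score separately for AI-handled and human-handled interactions is what makes an "AI-driven CSAT" comparison possible.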

Financial ROI and Business Impact

Any AI program must eventually tie back to the bottom line. Return on Investment (ROI) is a top-level KPI that compares the value delivered (revenue generated, costs saved, or productivity gained) with the total cost of deploying and operating the AI. ROI is consistently among the KPIs that business leaders cite most often for AI projects, since it expresses adoption and impact in monetary terms. As inputs to ROI calculations, you can monitor cost-related metrics such as the cost of training or fine-tuning AI models and the cost of ongoing operations. On the value side, track AI-driven revenue uplift (e.g., increased sales due to a recommendation engine) or cost avoidance (e.g., savings from automating paid human labor). According to one MIT researcher, the productivity benefits are tremendous, but cost savings are the real KPI that demonstrates the value of AI in financial terms. In brief, demonstrate that AI is either earning money or saving money, ideally both.
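
One common way to express this is ROI = (value delivered - total cost) / total cost. The sketch below uses the value and cost components mentioned above; the parameter names and figures are hypothetical, and your finance team may define ROI over a different period or with discounting.

```python
def ai_roi(revenue_uplift: float, cost_savings: float,
           training_cost: float, operating_cost: float) -> float:
    """Simple ROI: net gain divided by total cost (1.0 means 100%)."""
    total_value = revenue_uplift + cost_savings
    total_cost = training_cost + operating_cost
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (total_value - total_cost) / total_cost


# Illustrative numbers only: 200k of value against 100k of cost.
roi = ai_roi(revenue_uplift=120_000, cost_savings=80_000,
             training_cost=50_000, operating_cost=50_000)
```

A negative result simply means the initiative has not yet paid back its costs, which is common in early pilots.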

Reliability and Ethical Metrics

A more recent addition to AI KPIs is trust and responsibility. Reliability KPIs verify that the AI system is consistently available and operating within agreed boundaries (e.g., the percentage uptime of an AI service, or the percentage of erroneous responses). In mission-critical applications, the AI must be tracked against required service levels to sustain user confidence. Similarly, where you operate in regulated settings, you may track compliance measures (e.g., the percentage of AI decisions that passed fairness tests, or the number of AI outputs that needed manual correction). Although these are more operational, they are strategically important: a single reliability or ethical failure can be enough to cripple an AI rollout. According to one AI strategist, reliability, such as a predictable response to familiar prompts, is often a top KPI for GenAI, which underlines the significance of trustworthiness in AI behavior.

With these KPIs, organizations can ensure that their AI and generative AI initiatives are both innovative and demonstrably successful. The metrics above hold teams accountable for user acceptance, cost-effectiveness, and alignment with business objectives. Next, we turn to another fast-developing area, edge computing, and the KPIs that measure its success.

For additional insights into digital transformation with AI, read Customer Portals & Mobile Apps: Driving Growth for DTC Health Brands.

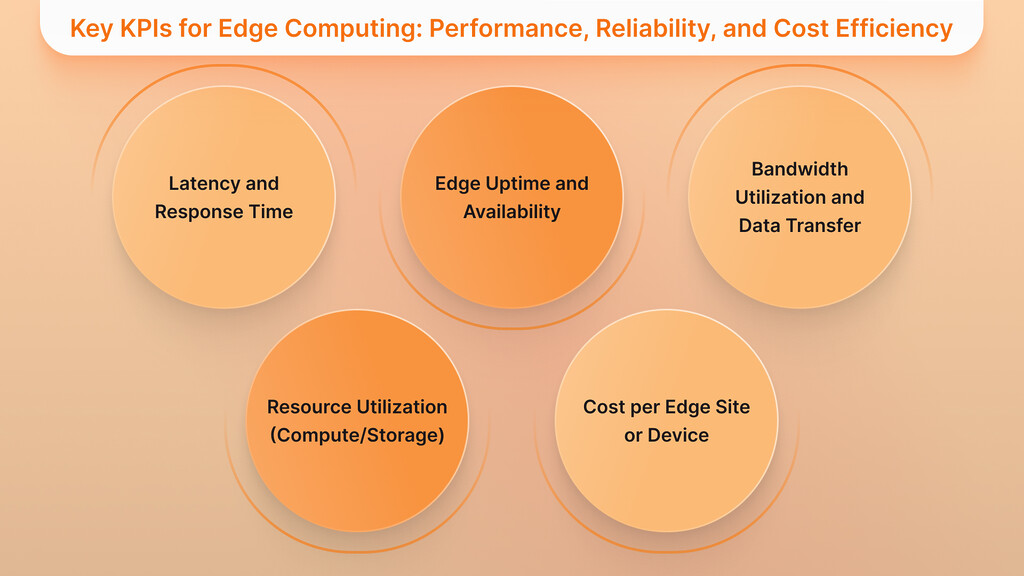

Key KPIs for Edge Computing: Performance, Reliability, and Cost Efficiency

Edge computing moves data processing and storage physically closer to the point where data is produced (devices, sensors, branch locations) to minimize latency and bandwidth consumption. Its strategic potential lies in better real-time response, lower cloud bandwidth costs, and greater resilience. To assess whether an edge computing project is fulfilling these promises, technology executives should keep an eye on a set of practical KPIs:

Latency and Response Time

Reduced latency is one of the most evident advantages of edge computing. Measure the average latency of latency-sensitive tasks after they have been shifted to the edge (e.g., the time to process a sensor reading or return a user response), and compare it with the prior baseline when everything ran in a central cloud. A drastic reduction in latency means success. For example, if a user request that took 200ms over the cloud now takes 20ms at the edge, that performance gain should be measured and reported. Real-time performance can be a selling point of edge deployments, so be sure to measure it. If latency is not decreasing, it can indicate a problem with the edge network setup or an underpowered edge node.
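
A minimal sketch of that before/after comparison follows. It assumes latency samples in milliseconds are available for both the cloud baseline and the edge deployment; the nearest-rank percentile helper is one of several valid percentile definitions, and the function names are hypothetical.

```python
import math


def percentile(samples_ms: list[float], pct: float) -> float:
    """Nearest-rank percentile of latency samples (pct in (0, 100])."""
    if not samples_ms:
        raise ValueError("samples_ms must not be empty")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_reduction(baseline_ms: float, edge_ms: float) -> float:
    """Fractional latency improvement relative to the cloud baseline."""
    if baseline_ms <= 0:
        raise ValueError("baseline_ms must be positive")
    return (baseline_ms - edge_ms) / baseline_ms


# The example from the text: 200 ms in the cloud vs. 20 ms at the edge
# is a 90% reduction.
improvement = latency_reduction(200, 20)
```

Averages can hide slow outliers, so it is often worth reporting a high percentile (such as p95) alongside the mean.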

Edge Uptime and Availability

Availability is a significant KPI because an edge site or device may be remote and prone to outages. Track the percentage of time each edge location or device is available (or site availability over time). High availability means your edge infrastructure can be relied on; downtime can translate instantly into lost revenue (e.g., an offline edge server in a retail store may halt point-of-sale systems). Availability monitoring can also reveal weak links (perhaps power or connectivity problems) that can be fixed, for example by adding redundant power supplies at an edge site where downtime is affecting operations. Many organizations aim for five nines (99.999%) availability on their critical infrastructure. That may be a stretch at the edge, but monitoring this indicator keeps reliability at the top of the list.
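
To put "five nines" in perspective, the sketch below computes availability from uptime and converts a target into an allowed downtime budget. The function names are hypothetical, and a 365-day year is assumed.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, assuming a 365-day year


def availability(uptime_minutes: float, total_minutes: float) -> float:
    """Fraction of the period during which the site or device was up."""
    if total_minutes <= 0:
        raise ValueError("total_minutes must be positive")
    return uptime_minutes / total_minutes


def allowed_downtime_minutes(target: float,
                             period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Downtime budget implied by an availability target (e.g. 0.99999)."""
    return (1 - target) * period_minutes


# Five nines leaves a little over five minutes of downtime per year.
budget = allowed_downtime_minutes(0.99999)
```

Seeing the target as a yearly budget of minutes makes it easier to judge whether it is realistic for remote edge sites.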

Bandwidth Utilization and Data Transfer

Edge computing is intended to minimize the raw data transmitted over the network to a central cloud or data center. Network KPIs include bandwidth load, which measures the volume of data traffic flowing between edge and cloud. When your edge strategy works, you should see a reduction in WAN bandwidth (because data is filtered or processed locally), or at least a flat line as data volumes increase. This can be expressed as cost savings, for example through lower cloud egress charges. The share of data processed at the edge vs. centrally should also be monitored. The more data is processed locally, the better edge resources are being used. Together, latency and bandwidth measurements provide a view of network efficiency. When bandwidth peaks are high and latency is rising, it may be a sign that the network is overloaded and that upgrades or additional edge compute are required.
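
Both ideas, the local processing share and the egress savings, can be sketched in a few lines. The per-GB price below is a placeholder, not a real cloud rate, and the function names are hypothetical.

```python
def edge_processing_share(edge_gb: float, cloud_gb: float) -> float:
    """Share of total data volume that was processed at the edge."""
    total = edge_gb + cloud_gb
    return edge_gb / total if total else 0.0


def egress_savings(baseline_gb: float, current_gb: float,
                   cost_per_gb: float) -> float:
    """Cost saved from reduced edge-to-cloud transfer in one period."""
    return (baseline_gb - current_gb) * cost_per_gb


# Illustrative figures: transfer dropped from 1,000 GB to 800 GB
# at an assumed (placeholder) price of 0.10 per GB.
share = edge_processing_share(edge_gb=50, cloud_gb=50)   # 0.5
saved = egress_savings(1000, 800, cost_per_gb=0.10)
```

A negative savings value would indicate that transfer volume grew, which is worth investigating alongside overall data growth.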

Resource Utilization (Compute/Storage)

Every edge node has finite compute capacity and memory. Monitoring CPU and memory usage at the edge nodes helps you avoid both overloading the local hardware and leaving capacity idle. A related KPI is storage utilization: what percentage of local storage is used to cache or process data locally? These metrics feed capacity planning: if an edge device is always at 90% of CPU and storage, it is time to scale the hardware or optimize the software. Extreme underutilization, on the other hand, might indicate that you over-provisioned. The objective is a balance in which edge nodes are used effectively without being overburdened. Application-specific performance can be tracked through application-level metrics at the edge (e.g., frame rates of an AI camera system). Make sure these technical KPIs are connected to business requirements: if an AI vision system is running on a factory-floor edge server, for example, its processing speed (frames per second) must meet the business need of controlling production quality.
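
A simple way to act on these readings is to classify each node against utilization thresholds. In the sketch below, the 90% upper bound echoes the example in the text, while the 20% lower bound is an assumption chosen for illustration; both should be tuned to your own hardware and workloads.

```python
def classify_utilization(avg_cpu_pct: float, avg_storage_pct: float,
                         high: float = 90.0, low: float = 20.0) -> str:
    """Flag an edge node for capacity planning based on average usage."""
    if avg_cpu_pct >= high or avg_storage_pct >= high:
        return "scale_up_or_optimize"   # node is running hot
    if avg_cpu_pct < low and avg_storage_pct < low:
        return "over_provisioned"       # capacity is sitting idle
    return "healthy"


# Hypothetical fleet readings: node name -> (CPU %, storage %).
fleet = {"store-001": (92.0, 60.0),
         "store-002": (45.0, 50.0),
         "store-003": (8.0, 12.0)}
report = {node: classify_utilization(cpu, disk)
          for node, (cpu, disk) in fleet.items()}
```

Averages over a sustained window work better here than instantaneous readings, since short spikes are normal.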

Cost per Edge Site or Device

As with AI, cost efficiency is essential to edge initiatives. Determine the cost per edge deployment (e.g., hardware, operations, and connectivity) and weigh it against the benefit it delivers. Important KPIs may include cost savings from reduced cloud consumption (did running analytics on-site cut your cloud bill by 20%?) or revenue protected by edge uptime (did preventing downtime at a store avoid X in lost sales?). Some organizations combine these into an ROI for edge, for example by recording how quickly an edge investment pays back in operational savings. Scalability KPIs, including the number of edge nodes deployed or how many operations now run at the edge rather than in the cloud, can also reflect the progress of the overall edge plan. If 5% of data was initially processed at the edge and today it is 50%, that demonstrates success and shows progress toward a fully integrated edge strategy.
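
The payback idea can be sketched as a simple calculation. This assumes a one-off investment per site and steady monthly savings, which is a simplification; the function names and figures are hypothetical.

```python
def payback_months(investment: float, monthly_savings: float) -> float:
    """Months needed for operational savings to cover the edge investment."""
    if monthly_savings <= 0:
        raise ValueError("monthly_savings must be positive to pay back")
    return investment / monthly_savings


def bill_reduction(before: float, after: float) -> float:
    """Fractional reduction in a recurring bill (0.2 means 20% lower)."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before


# Illustrative: a 60k edge site saving 5k per month pays back in a year.
months = payback_months(60_000, 5_000)       # 12.0
cloud_cut = bill_reduction(10_000, 8_000)    # 0.2
```

Comparing payback across sites can help decide where to deploy the next edge nodes.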

Security and Compliance Metrics

Security KPIs matter because edge computing distributes information beyond centralized control. Measure the number of security incidents or breaches at edge locations, and the percentage of edge devices that are up to date with security patches. Ideally, incident counts stay low and patch coverage stays high; either way, these are good KPIs to show that security is being watched. You may also quantify compliance adherence at the edge (e.g., retaining edge data in a GDPR-compliant manner, where applicable). Security metrics are a reminder that edge deployments must not become the Achilles' heel of your enterprise risk posture. They are also strategically important, since a security failure at the edge could offset all the performance advantages by creating business risk.

By monitoring performance, reliability, utilization, and cost KPIs, organizations can measure edge computing in business terms. For example, better latency and uptime on retail branch servers may be mapped to increased customer satisfaction and sales, turning a technology metric into a business narrative. Edge KPIs can therefore confirm that moving computing capacity nearer to end users is paying the dividends it is supposed to (faster services, lower costs, higher resilience).

To better understand infrastructure trade-offs, see Edge Computing vs. Cloud Computing: Choosing the Right IT Infrastructure for Your Business.

Key KPIs for Quantum Computing: Tracking Progress and Value in a Nascent Technology

Quantum computing is a prime example of an emerging technology that is not yet fully developed but could have a revolutionary effect. In most organizations, quantum computing is still in the research or pilot stage rather than in full production. This means the KPIs for quantum projects tend to be oriented toward progress, capability, and strategic readiness rather than the more traditional uptime or revenue KPIs. Key KPIs for assessing quantum computing projects include quantum volume, qubit fidelity, algorithm test success rate, and the training level of quantum-literate staff.

In short, the key performance indicators for quantum computing are about quantifying incremental progress and linking it to business value. Although revenue or ROI may not be immediate, you can demonstrate value by tracking metrics on better solutions, cost trends, and strategic preparedness. Tech executives should share these KPIs so that stakeholders remain assured that the quantum project is on track and producing early indicators of business value (though payoffs may be several years away). In that way, you retain supporters and funding: it is a moonshot, but a metric-driven one.

Implementing and Optimizing KPI Tracking for Emerging Tech

Choosing the right KPIs is only half the job; the other half is putting in place the mechanisms to measure, monitor, and act on them. The following are some actionable steps organizations can take to implement KPI-based management of AI and emerging technology projects:

Align KPIs with Strategic Goals

Begin by establishing a clear relationship between every KPI and a business objective. Ask the question, "What strategic objective does this metric inform?" For example, when aiming to make customer support more efficient, you can establish KPIs such as the AI chatbot containment rate or the reduction in average handling time. Ensure that each KPI has a purpose that stakeholders understand. Stay away from vanity measures: go after the ones that show movement toward revenue growth, cost reduction, customer experience improvements, or innovation leadership (the things executives care about).

Select a Focused Set of KPIs

Trying to monitor every aspect is a trap, and a dashboard with too much information will hide what matters. Keep the KPI set short, usually no more than 5 major KPIs per initiative, so that it does not overburden stakeholders. Apply SMART criteria: metrics should be Specific, Measurable, Attainable, Relevant, and Time-bound. For example, instead of a generic goal, it is better to say, "boost the adoption rate of AI tools to 60% of employees by Q4." When you restrict the number of KPIs and make them SMART, you gain focus and clarity in execution.
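
One lightweight way to enforce this discipline is to record each KPI in a structured form and validate the set. The sketch below is only an illustration: the `Kpi` fields, the validation rules, and the deadline year are assumptions, and attainability still requires human judgment rather than code.

```python
from dataclasses import dataclass
from datetime import date

MAX_KPIS_PER_INITIATIVE = 5  # the upper bound suggested in the text


@dataclass(frozen=True)
class Kpi:
    name: str        # Specific: what exactly is measured
    unit: str        # Measurable: how it is expressed
    target: float    # Attainable: target agreed with the owner
    objective: str   # Relevant: the business objective it supports
    deadline: date   # Time-bound: when the target should be met
    owner: str       # Person accountable for the metric


def validate_kpi_set(kpis: list[Kpi]) -> list[str]:
    """Return human-readable problems; an empty list means the set passes."""
    problems = []
    if len(kpis) > MAX_KPIS_PER_INITIATIVE:
        problems.append(f"too many KPIs: {len(kpis)} > {MAX_KPIS_PER_INITIATIVE}")
    for k in kpis:
        if not k.objective.strip():
            problems.append(f"{k.name}: no business objective")
        if not k.owner.strip():
            problems.append(f"{k.name}: no owner")
    return problems


# The example from the text; the year is a placeholder for "Q4".
adoption = Kpi("AI tool adoption rate", "% of employees", 60.0,
               "Increase productivity", date(2025, 12, 31), "Head of Product")
issues = validate_kpi_set([adoption])   # []
```

Keeping KPI definitions in one reviewed file or table also makes ownership and deadlines visible to every stakeholder.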

Invest in Measurement Infrastructure

After setting KPIs, give your teams the tools to measure and report them. This may include setting up analytics dashboards, instrumenting your applications to capture the relevant data, and putting monitoring systems in place. For AI projects, MLOps platforms can monitor model performance, latency, and drift in real time. In edge computing, centralized management systems can gather uptime and utilization statistics from distributed locations. Automate and standardize data collection so that KPI reports are accurate and up to date. It is also prudent to assign clear data ownership, with a named team member responsible for each KPI, to safeguard data quality and provide analysis.

Review KPIs Regularly with Stakeholders

Establish a cadence (e.g., monthly or quarterly business reviews) in which tech and business leaders discuss KPI outcomes together. This keeps everyone aligned and helps interpret what the numbers mean. During a review, when a KPI has deviated from projections, keep perspective and explain why. It is also worth remembering that a positive change in one area can affect another (e.g., increased AI automation may temporarily raise average handling time, because the more complicated tasks remain in human hands). Leadership should consider KPIs in context rather than in isolation and, where possible, use industry benchmarks or historical trends to gauge performance.

Be Prepared to Iterate

KPIs do not stay the same. Some metrics may stop being relevant as projects mature, and new ones may emerge. That is why you need a continuous retrospective mechanism to determine whether the KPIs you have chosen remain appropriate. For example, once a solution is deployed, you may shift from adoption metrics to more advanced measures such as customer retention or profit contribution. Do not be afraid to adjust the KPIs to reflect the project's stage or new strategic intentions. The key is to keep tracking metrics that drive the desired behavior and results.

Connect KPIs with Optimization Activity

Lastly, use what you learn to make things better. Each KPI should have an associated action plan in case it underperforms. When a model's accuracy is below target, it may be time to add training data or tune the algorithm. If edge downtime is a problem, invest in redundancy or improved monitoring. Prioritize and allocate resources according to what the metrics indicate. This closes the loop: KPIs stop being numbers to report and become drivers of ongoing improvement.

By taking these steps, organizations build a culture in which emerging technologies are managed as rigorously as other major business initiatives. KPI tracking becomes a feedback loop for shaping strategy, validating success, and spotting problems in time to correct them. This reduces the risk of innovation: even ambitious, technologically adventurous experiments rest on clear signals and are measurable, and therefore can be scaled or corrected based on hard data.

Turning Metrics into Strategic Advantage

When it comes to AI, edge, and quantum, it is easy to be overawed by the possibilities. However, real thought leadership in technology emerges when innovation is coupled with accountability. The KPIs above, from AI adoption rates and AI-driven ROI to edge latency improvements and quantum R&D milestones, are the means of keeping emerging technology initiatives in touch with business reality. They help answer the most critical C-suite questions: Is this technology making us better, faster, or more competitive? By how much? Is it worth the investment?

By examining these KPIs and their strategic value, we emphasize one of the major messages for tech executives and product managers: measurement is the navigator of innovation. Well-defined KPIs help you sail the uncharted waters of generative AI models, distributed edge systems, and quantum algorithms with confidence that you are on the right course. Furthermore, monitoring these measures signals to the organization that new technologies will be held to the same performance standards as any other initiative, which aligns teams around shared goals and a common language of success.

When you promote AI and other new technologies within your organization, remember that your KPIs are not only numbers on a screen; they are storytellers. They tell the story of progress, value, and learning. Use them to communicate wins (for example, announcing that your AI implementation has boosted customer satisfaction by 15%, or that edge computing has cut data costs in half) as well as early warnings. In doing so, you turn cold data into knowledge and knowledge into strategic action.

The way to tame transformative tech is to measure, measure, measure. Define success at the outset, stay close to the action with pertinent KPIs, and be ready to act on what you learn. Companies that do this well will not just deploy AI, edge, and quantum technologies; they will weave these capabilities into the fabric of business value creation. That is the ultimate KPI of leadership in the era of emerging tech. When you focus on the right KPIs, innovation stops being just a word and becomes a source of impact that puts your organization at the forefront.

Key Takeaways

- KPIs for AI and new technologies should cover both technical performance and business impact.

- Examples of generative AI KPIs include accuracy, hallucination rates, and user adoption.

- Edge computing KPIs focus on latency, reliability, and performance.

- Quantum computing KPIs are based on capability development, integration, and organizational preparedness.

- Implementation requires the right tools, cross-functional input, and iteration.

- Measuring success in generative AI, edge, and quantum isn't just possible; it's essential.

Why are KPIs important for AI and emerging technologies?

KPIs are crucial because they help close the gap between technical experimentation and real business outcomes. They are especially useful in emerging technology spaces such as AI, edge computing, and quantum computing, where outcomes are not always obvious or readily apparent. They help organizations track performance, justify investment, manage risk, and keep teams aligned with strategic goals.

What are the most critical KPIs for measuring success in Generative AI?

The hallucination rate (a measure of the accuracy of AI-generated output), user adoption rate, efficiency improvements (such as time saved or cost per asset), and customer satisfaction are some of the most valuable KPIs. These metrics indicate how well the AI performs and whether it is actually creating value in practice.

How do I measure user adoption in a Generative AI system?

Adoption can be quantified using active user numbers, such as daily and monthly active users (DAUs and MAUs), as well as frequency and quality of use, for example the number of users who return to the tool weekly or how often it is used in core workflows. Low or declining adoption may stem from usability issues, a lack of trust, or a lack of training, each of which needs to be addressed.

What financial KPIs are used to justify AI investments?

Some critical financial metrics include Return on Investment (ROI), cost-to-serve reduction, revenue growth driven by AI features, and the cost per task or asset produced with AI. These demonstrate whether your AI implementation is generating economic value, whether through efficiency gains or through growth.

Can KPIs help improve trust and ethics in AI systems?

Yes. Trust-related KPIs, including uptime, error rate, fairness testing pass rate, and the number of manually corrected outputs, help organizations assess a system's reliability and ethical conduct. These metrics are critical in regulated industries or customer-facing use cases where errors may undermine confidence.

What are the top KPIs for edge computing success?

The edge computing KPIs must cover latency reduction (i.e., request/response time), device or site uptime, bandwidth per edge node, cost per edge node, and local data processing. Collectively, these metrics capture whether your edge strategy is enhancing performance while minimizing operational overhead.

How do I know if my edge computing solution is performing well?

Compare current measurements with your pre-deployment baselines. Success shows up as reduced latency, high availability, greater data locality, and more efficient hardware utilization. If you are still experiencing cloud-like lag times or system bottlenecks, your edge deployment may need to be optimized or scaled.

Which security KPIs are relevant for edge deployments?

Notable security KPIs include the number of breaches at edge locations, patch/update compliance, and adherence to regulatory requirements (e.g., GDPR-compliant handling of local data). These help ensure that, as you decentralize processing, you do not expose your business to unnecessary security risks.

How do KPIs for quantum computing differ from those of other technologies?

Quantum KPIs are less concerned with short-term performance than with long-term preparedness. Metrics such as quantum volume, qubit fidelity, algorithm test success rate, and the training level of quantum-literate staff show progress. These KPIs are focused on capability building and ecosystem maturity, as most quantum applications remain experimental.

What are some examples of business-aligned AI KPIs?

They can include Customer Satisfaction Score (CSAT), Net Promoter Score (NPS) for AI interactions, cost savings per automated task, increased employee productivity, and the sales conversion rate following AI adoption. Such KPIs are aligned with the main business priorities, such as service quality, time savings, and revenue.

How many KPIs should I track per initiative?

Ideally, track no more than 3-5 SMART KPIs per project to avoid analysis paralysis. Every KPI must have a specific owner, a target value, and a clear link to business results. It is always possible to add more metrics later if a deeper analysis is needed.

What tools can help track KPIs for emerging tech?

For AI projects, MLOps frameworks such as MLflow or Weights & Biases are well suited to monitoring model metrics. Edge deployments benefit from infrastructure monitoring tools such as Datadog or Prometheus, and from custom dashboards. In the case of quantum, test environments and simulators are useful for benchmarking progress. Select tools that provide real-time visibility and historical trends.

How often should KPIs be reviewed?

KPIs should be reviewed regularly: monthly, quarterly, or at the pace of your project. Such reviews help identify poor performance early, reward achievements, and keep KPIs aligned with changing business objectives. Bring both tech and business teams to the table so that the reviews are cross-functional.

Should KPIs evolve over time?

Absolutely. KPI priorities vary with project maturity. Early AI pilots can focus on model accuracy and adoption, whereas later stages can focus on ROI and customer retention. Stay nimble: review your KPIs periodically and reset them as goals change or new knowledge emerges.

How do I align KPIs with business strategy?

You can begin by deciding what business outcome you are trying to achieve (cost reduction, better experience, faster time to market, and so on) and then selecting KPIs that map directly to that objective. For example, when you plan to expand digital customer care, a relevant KPI is the chatbot resolution rate.