Intelligent Document Processing (IDP) refers to a class of solutions that automate the reading and processing of documents using AI technologies. Unlike traditional Optical Character Recognition (OCR), which only extracts text, IDP integrates OCR with artificial intelligence and machine learning to extract, understand, and organize data from documents in various formats. This means an IDP system can recognize text in a document while also interpreting its context and meaning. The result is unstructured data (such as scanned forms, invoices, PDFs, images, and emails) transformed into structured, actionable information with minimal human intervention.

Importance of IDP for Businesses

Organizations everywhere manage vast volumes of data locked in documents such as invoices, receipts, legal contracts, and medical records. Processing these documents by hand is slow, error-prone, and expensive. Traditional approaches consume employee time and depend on rigid templates and rules that struggle with diverse inputs. Automating document processing speeds up business processes while reducing the cost of managing document workflows. IDP shortens response times and improves accuracy, freeing workers to focus on more valuable tasks than routine data entry.

This whitepaper details comprehensive IDP solutions on Amazon Web Services (AWS) that implement event-driven architecture using Node.js programming for custom logic. This section will explain the business problems IDP resolves and the organizational benefits it provides. Additionally, this section highlights the fundamental AWS technologies and services that create an IDP solution, detailing the roles of AWS Lambda, Amazon S3, Amazon Textract, Amazon Comprehend, Amazon SNS, Amazon SQS, and AWS Step Functions. The paper also discusses how a serverless and event-driven strategy yields optimal outcomes.

The reference architecture illustrates how the system components work together and where optional Large Language Model (LLM) integration fits, including Amazon Bedrock, Anthropic Claude, and OpenAI’s ChatGPT for enhanced document understanding. The discussion also includes real-world industry examples from transportation and medicine that showcase practical implementations. It concludes with a series of next steps for business and technology leaders who want to start an IDP implementation.

Business Challenges & Benefits of IDP

Manual Document Processing Challenges

Businesses face several challenges when staff manually handle large volumes of documents. Operators spend many hours reading PDFs and forms and extracting information from them, which slows down downstream workflows. Manual work is also error-prone: workers may mistype values or skip important fields, producing inaccurate data. Industries such as logistics and healthcare handle billions of documents each year, including invoices, bills of lading, and medical claims, and processing them by hand is costly. Traditional OCR software can capture text, and layout templates improve its results, but its output still requires manual verification to interpret the content correctly. Manual handling also increases compliance risk, because access to information is hard to monitor and sensitive data is exposed to potential mishandling. Results are inconsistent because different staff members follow different procedures. Finally, manual processing does not scale: the only way to handle more documents is to hire more staff, and recruitment and training may not keep pace with business growth.

Benefits of Intelligent Document Processing

Reduced Manual Effort & Higher Efficiency

IDP automates time-consuming data extraction and cuts processing time. Manual document operations that previously took hours can complete in minutes or even seconds. With simpler workflows, employees shift from data entry to higher-value tasks. Organizations that apply machine learning to document processing can see efficiency gains of 30% or more. Processing forms instantly also shortens wait times; for example, customer onboarding can complete immediately instead of after several days.

Improved Accuracy & Compliance

Applying AI through Intelligent Document Processing (IDP) significantly reduces the mistakes that human operators make. IDP has been shown to reduce data extraction errors by 80–90% by capturing precise, standardized information. Fewer errors mean that reliable data reaches downstream systems and less rework is needed. IDP also supports compliance through automated rules that validate essential fields and flag abnormal entries. The system logs document handling activity, recording which person or machine processed each document and when. Automated compliance checks and secure data handling help organizations adhere to regulations such as GDPR and HIPAA when managing sensitive information. In addition, IDP can automatically identify personally identifiable information (PII) in documents and redact it, protecting patient and customer privacy.

Scalability & Speed

Unlike manual operations, IDP solutions adjust to growing document volumes. Once implemented, the same automated workflow can process 100 documents or 100,000, with cloud resources scaling to match demand. An organization can grow or absorb seasonal peaks with minimal increases in headcount. IDP thus provides large-scale, fast, and accurate data processing. A pipeline built on AWS, for example, can automatically run additional concurrent Lambda function instances to absorb a higher workload while keeping processing fast.

Cost Savings

By reducing manual work and errors, IDP generates substantial savings. IDP systems can cut operational costs by 30–50% because staff spend their time on other tasks instead of data entry and correction. Faster processing brings further financial advantages, including quicker billing cycles and fewer compliance fines caused by processing errors. With pay-per-use cloud services, organizations pay for AI services and compute only while documents are actually being processed. Automated document processing is therefore both faster and more cost-effective than manual handling.

Better Decision-Making & Productivity

IDP makes digital document data available more quickly and with greater accuracy. Downstream systems and analytics can use the information immediately, which leads to more timely and informed decisions. When invoice data is extracted and posted as invoices arrive, finance departments can manage budgets and cash flow with up-to-date information. Relieved of administrative duties, employees gain time for analytical work, customer service, and other business tasks. Organizations can move staff into value-driven roles, which also improves employee satisfaction.

IDP turns document handling into an optimized, automated flow. It addresses the main problems of manual handling (slow speed, errors, limited scalability, and compliance issues) and delivers faster, more accurate processing that scales on demand, at lower cost and with stronger compliance. These benefits let business leaders accelerate operations while reducing operating expenses and operational risk. For IT leaders, implementing IDP is a way to modernize outdated systems and bring intelligent automation into their digital transformation strategy.

Technology Overview

Implementing an Intelligent Document Processing solution requires a combination of technologies that facilitate event-driven processing, AI-powered data extraction, and seamless integration of components. AWS offers a rich ecosystem of managed services that act as the foundational elements for IDP, and these can be integrated using an event-driven, serverless architecture. In this section, we outline the key technology components – focusing on AWS services and the Node.js runtime – that support a robust IDP system.

Event-Driven Architecture with AWS

IDP workflows are inherently event-driven: documents arrive unpredictably and in varying quantities rather than on a regular schedule. In an event-driven architecture, each processing stage is activated by the completion of a previous operation or by an external trigger, which keeps components decoupled and scalable. AWS supports this model well. For example, an Amazon S3 event can automatically invoke an AWS Lambda function when a document arrives. The Lambda function calls Amazon Textract to extract text, and the completion of that job emits an event that triggers the next processing step. The result is a serverless pipeline in which no component sits idle waiting for work; each part runs on demand and scales with the workload. The system achieves high scalability and cost efficiency because AWS manages the underlying resources, running more function instances during busy periods and none when the system is idle.

Core AWS Services for IDP

Several AWS managed services are essential for developing IDP solutions:

Amazon S3 (Document Storage and Intake)

Amazon Simple Storage Service (S3) is an object storage service that provides scalable storage for document files and intermediate processing results. In an IDP context, S3 serves as the document intake location: scanned PDFs or images are uploaded to S3 buckets. S3 event notifications trigger processing automatically when files arrive. S3 also stores extracted text, JSON results, and processed documents reliably. It provides strong security and durability, and buckets and key prefixes (folders) can be used to organize documents by type and processing state, with fine-grained access control and lifecycle management.

AWS Lambda (Event-Driven Processing)

AWS Lambda is a serverless compute service that runs code in response to event triggers. In an IDP pipeline, Lambda functions are the glue between components, running custom tasks in reaction to S3 events, scheduled triggers, or message-based events. Node.js is well suited to Lambda functions because of its fast startup time and efficient handling of I/O operations. Our IDP solution relies on Node.js-based Lambda functions that orchestrate each step, for example reacting to a new document upload in S3 by starting an extraction job, or post-processing OCR results before classification. Lambda scales automatically with the event rate, running many instances in parallel and none when no work remains, which minimizes cost. AWS SDKs, including the SDK for JavaScript, give Lambda functions easy access to other AWS services such as Textract and Comprehend, as well as S3 and databases.

Amazon Textract (OCR and Data Extraction)

Amazon Textract is the machine learning OCR service from AWS. It automatically extracts printed text, handwriting, and structured data from documents. Beyond plain OCR, Textract recognizes document structure: it identifies tables and form fields, including key-value pairs and checkboxes, which preserves the original structure of the extracted data. Textract is the first AI component in the IDP pipeline, converting scanned images or PDFs into machine-readable text. It offers synchronous APIs for quick processing of small documents and asynchronous APIs for large ones. With the asynchronous API, a Lambda function can start a job on a multi-page PDF and receive the completion callback through Amazon SNS/SQS. Textract is fully managed and pre-trained on invoices, forms, IDs, and other documents, so no model training is needed. It returns results as JSON, including detected words with their coordinates and structured output from form and table analysis that other systems can consume.

Amazon Comprehend (Natural Language Processing)

Amazon Comprehend is a natural language processing (NLP) service that analyzes text for language, sentiment, key phrases, and entities such as people, places, and dates; it also offers topic modeling. In IDP, Comprehend adds understanding to the text that Textract extracts. Two capabilities are central: document classification, which determines the document type, and entity extraction, which identifies names, organizations, and monetary amounts. Amazon Comprehend Medical is a specialized, HIPAA-eligible service for medical text that extracts health data such as medical conditions, medications, and procedures, and identifies protected health information (PHI). Comprehend can also detect PII in text, such as social security numbers and addresses, which can then be masked or removed for compliance purposes. IDP systems often use a Comprehend custom classifier to categorize the text extracted by Textract and route each document to the processing flow for its type. They also use Comprehend entity recognition (built-in or custom) to extract specific fields from the text, such as invoice numbers, dates, total amounts, and patient names.
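The PII masking described above can be sketched as a small pure function. This is a minimal illustration, not the whitepaper's implementation: it assumes the entity list has the shape returned by Comprehend's DetectPiiEntities operation (Type, Score, BeginOffset, EndOffset), that entities do not overlap, and that offsets index the same string passed in. The function name `redactPii` and the 0.8 score threshold are hypothetical.

```javascript
// Replace each detected PII span with a "[TYPE]" placeholder.
// Assumption: `entities` follows the DetectPiiEntities response shape
// ({ Type, Score, BeginOffset, EndOffset }) and spans do not overlap.
function redactPii(text, entities, minScore = 0.8) {
  const selected = entities
    .filter((entity) => entity.Score >= minScore)
    // Work from the end of the text so earlier offsets stay valid.
    .sort((a, b) => b.BeginOffset - a.BeginOffset);

  let redacted = text;
  for (const entity of selected) {
    redacted =
      redacted.slice(0, entity.BeginOffset) +
      `[${entity.Type}]` +
      redacted.slice(entity.EndOffset);
  }
  return redacted;
}

// Hypothetical sample input; offsets are computed rather than hard-coded.
const sampleText = "Patient John Doe, SSN 123-45-6789.";
const sampleEntities = [
  { Type: "NAME", Score: 0.99, BeginOffset: sampleText.indexOf("John"), EndOffset: sampleText.indexOf("John") + 8 },
  { Type: "SSN", Score: 0.98, BeginOffset: sampleText.indexOf("123"), EndOffset: sampleText.indexOf("123") + 11 },
];

console.log(redactPii(sampleText, sampleEntities));
```

Replacing from the last span backwards avoids recomputing offsets after each substitution, which is why the entities are sorted in descending order first.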

Amazon SNS (Simple Notification Service)

Amazon SNS is a pub/sub messaging service that acts as an event broadcaster. IDP solutions use SNS to notify other parts of the system that a step has finished. When an asynchronous Textract job completes, Textract publishes a notification to an SNS topic. Subscribers to that topic, such as SQS queues or Lambda functions, receive the message and trigger the next step. SNS decouples the services: Textract simply announces job completion, and SNS distributes the notification to whoever has subscribed. For internal workflow progression, SNS is typically paired with SQS; SNS can also deliver email and alert notifications where needed.

Amazon SQS (Simple Queue Service)

Amazon SQS is a managed message queue that works with SNS and Lambda to pass tasks reliably between components. SQS stores messages durably until a consumer has processed them, so work is not lost between steps. It also decouples components, which improves resilience: messages stay in the queue while a downstream service is temporarily unavailable. In our system, Textract completion notifications arrive in a queue through an SNS-to-SQS subscription and wait there until a Lambda function retrieves them. If many documents finish OCR at the same time, the notifications queue up in SQS and our Lambda can process them at a controlled rate. SQS can likewise hold documents that await other processing steps, such as classification jobs. This arrangement prevents any service from being overloaded. SQS is therefore a key element of the event-driven workflow and increases the system’s robustness and scalability.

AWS Step Functions (Workflow Automation)

AWS Step Functions is a serverless workflow orchestration service that coordinates sequences of AWS Lambda functions and other services as a visual state machine. Step Functions is particularly useful for managing multi-step processes with branching, parallel tasks, retries, and timeouts – all through configuration rather than manual coding. In an IDP solution, Step Functions can orchestrate the end-to-end workflow of document processing.

For instance, instead of linking Lambdas via events alone, you might have a Step Function that defines: Step 1: invoke Lambda to start Textract; Step 2: wait for Textract result (perhaps by polling or via callback pattern); Step 3: invoke Lambda to classify the text; Step 4: branch – if classification = Invoice, do X, if = Contract, do Y; Step 5: for each branch, invoke respective extraction/enrichment tasks; Step 6: aggregate results and invoke a validation step; etc.
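The steps listed above could be expressed in Amazon States Language roughly as follows. This is a sketch under stated assumptions: the state names and the function names StartTextractFunction, InvoiceExtractionFunction, and ContractExtractionFunction are hypothetical, the wait for Textract uses the task-token callback pattern rather than polling, and the classification Lambda is assumed to return an object with a `documentType` field. The small helper only checks that every transition points at a state that exists.

```javascript
// Helper to build a plain Lambda invoke task (hypothetical function names).
const invoke = (functionName, next) => ({
  Type: "Task",
  Resource: "arn:aws:states:::lambda:invoke",
  Parameters: { FunctionName: functionName, "Payload.$": "$" },
  OutputPath: "$.Payload",
  ...(next ? { Next: next } : { End: true }),
});

const stateMachineDefinition = {
  Comment: "Sketch of an IDP workflow",
  StartAt: "StartTextract",
  States: {
    // Steps 1-2: start Textract, then pause until a callback returns the task token.
    StartTextract: {
      Type: "Task",
      Resource: "arn:aws:states:::lambda:invoke.waitForTaskToken",
      Parameters: {
        FunctionName: "StartTextractFunction",
        Payload: { "input.$": "$", "taskToken.$": "$$.Task.Token" },
      },
      TimeoutSeconds: 900,
      Retry: [{ ErrorEquals: ["States.TaskFailed"], IntervalSeconds: 5, MaxAttempts: 2, BackoffRate: 2 }],
      Next: "ClassifyText",
    },
    // Step 3: classify the extracted text.
    ClassifyText: invoke("DocumentClassificationFunction", "RouteByType"),
    // Step 4: branch on the classification result.
    RouteByType: {
      Type: "Choice",
      Choices: [
        { Variable: "$.documentType", StringEquals: "Invoice", Next: "ExtractInvoice" },
        { Variable: "$.documentType", StringEquals: "Contract", Next: "ExtractContract" },
      ],
      Default: "Validate",
    },
    // Step 5: type-specific extraction and enrichment.
    ExtractInvoice: invoke("InvoiceExtractionFunction", "Validate"),
    ExtractContract: invoke("ContractExtractionFunction", "Validate"),
    // Step 6: validate the aggregated result.
    Validate: invoke("ValidationAndRoutingFunction", null),
  },
};

// Return transition targets that do not name an existing state.
function findDanglingTransitions(definition) {
  const names = new Set(Object.keys(definition.States));
  const targets = [definition.StartAt];
  for (const state of Object.values(definition.States)) {
    if (state.Next) targets.push(state.Next);
    if (state.Default) targets.push(state.Default);
    for (const choice of state.Choices ?? []) targets.push(choice.Next);
  }
  return targets.filter((target) => !names.has(target));
}

console.log(findDanglingTransitions(stateMachineDefinition)); // empty when all targets exist
```

Serializing the object with `JSON.stringify(stateMachineDefinition)` yields the definition string that a deployment tool would submit; the retry and timeout values here are placeholders.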

Using Step Functions simplifies orchestration logic by providing a central definition of the workflow and built-in error handling. It also enhances the process’s resilience, as failed steps can be automatically retried or exceptions caught, and it facilitates easier monitoring through its execution history. In a logistics use case we’ll explore, Step Functions coordinated document checks, extraction, and human review, improving resiliency with less custom code. While Step Functions is optional (you can chain events without it), it becomes increasingly valuable as the complexity of the workflow increases or when long-running jobs and conditional logic are involved.

Programming Language Choice: Node.js for IDP (JavaScript Runtime)

We have selected Node.js as the runtime for custom IDP logic inside AWS Lambda. The main reasons are:

- Event-Driven, Non-Blocking I/O: With async/await and promises, Node.js code can issue AWS service calls without blocking execution, which yields high throughput.

- Rich Ecosystem: Through npm, the Node.js ecosystem provides numerous libraries that speed up development of data parsing, API calling, and image manipulation tasks. AWS maintains the AWS SDK for JavaScript v3, which simplifies connecting Node.js code to AWS services.

- Fast Deployment and Startup: Node.js Lambda functions typically have cold-start times in the range of hundreds of milliseconds, which keeps latency low in event-driven systems.

- Unified Language Stack: Using Node.js on the back end lets a single language run from front end to back end. Teams benefit because they can share utility code and use common linters and tools. CTOs and tech leads should also consider the talent pool: JavaScript is one of the most sought-after programming languages, which makes it easier to hire developers who can build or maintain Node.js-based IDP solutions. AWS Lambda supports multiple languages, but this whitepaper uses Node.js as a strong option for building IDP logic.

The framework is modular: each AWS service independently handles one concern, such as storage, OCR, NLP, or messaging. The workflow is serverless, because Lambda, S3, SNS, SQS, and Step Functions require no server maintenance from us; we simply configure the services and deploy Lambda code. Scalability, high availability, and lower operational costs come built in with these services. The following section shows how the components fit together in a reference architecture for IDP, with event-driven connectivity and optional large language model integration.

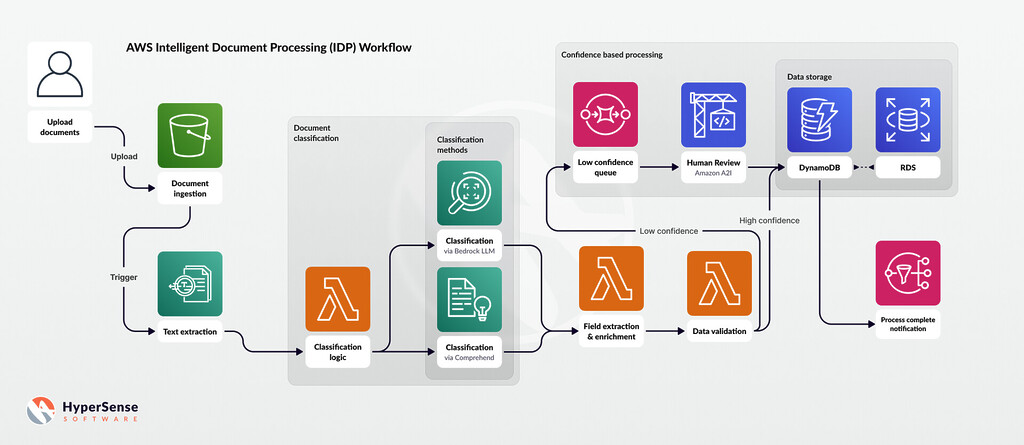

Reference Architecture for IDP

To understand how an AWS-based IDP solution works concretely, let’s walk through a high-level system design that ties together the services discussed. The architecture diagram below illustrates an end-to-end intelligent document processing pipeline using AWS. It covers document ingestion, text extraction, classification, data enrichment, validation, and storage, with points for integrating LLMs (large language models) and human review where appropriate.

Step-by-Step Workflow

The IDP reference architecture can be broken down into a sequence of event-driven steps:

Document Ingestion

The process starts when a document is received. Documents may arrive when users upload files through a web interface, send them as email attachments, or upload batches through FTP/SFTP servers. In our AWS-centric design, all supported document formats, such as PDF, TIFF, and JPEG, are stored in an “Input Documents” S3 bucket. S3 produces an event notification for every uploaded document. For example, when a file named “invoice_123.pdf” is added to the bucket, S3 can send a notification event to an AWS Lambda function. The event carries essential information about the file, including the bucket name and object key. As the intake layer, S3 provides a secure, durable repository and decouples processing from the document source: documents from any channel land in the same bucket.
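As a minimal sketch of how a Lambda handler might read the bucket name and object key from such an event, consider the function below. The function name `extractS3Objects`, the bucket name, and the sample event are hypothetical; the event is trimmed to the fields used here. One detail worth handling is that S3 URL-encodes object keys in event payloads and represents spaces as "+".

```javascript
// Extract { bucket, key } pairs from an S3 event notification payload.
// Keys arrive URL-encoded, with spaces encoded as "+", so decode them first.
function extractS3Objects(event) {
  return (event.Records ?? [])
    .filter((record) => record.eventSource === "aws:s3")
    .map((record) => ({
      bucket: record.s3.bucket.name,
      key: decodeURIComponent(record.s3.object.key.replace(/\+/g, " ")),
    }));
}

// Hypothetical sample event, reduced to the fields this sketch reads.
const sampleS3Event = {
  Records: [
    {
      eventSource: "aws:s3",
      eventName: "ObjectCreated:Put",
      s3: {
        bucket: { name: "input-documents" },
        object: { key: "incoming/invoice+123.pdf" },
      },
    },
  ],
};

console.log(extractS3Objects(sampleS3Event));
```

A handler would typically loop over the returned pairs and start one extraction job per object.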

OCR/Text Extraction

When a document arrives in S3, the system invokes a Node.js-based Lambda function, which we can call DocumentTextExtractionFunction. Its primary role is to start text extraction for new documents by calling Amazon Textract on the file stored in S3. For single-page PDFs or images, the Lambda function can call Textract’s DetectDocumentText or AnalyzeDocument API synchronously and obtain the results within a single invocation. For longer or multi-page documents, the asynchronous StartDocumentTextDetection and StartDocumentAnalysis operations are the better fit. The Lambda function submits the job and then finishes its execution; Textract continues working in the background and, when it is done, publishes a notification to the specified SNS topic.
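The choice between the synchronous and asynchronous paths can be sketched as a function that builds the request parameters for either operation. This is an illustration only: the ARNs are placeholders, the function name `planTextractCall` is hypothetical, and the rule "single images go synchronous, everything else goes asynchronous" is a simplifying assumption, since the page count of a PDF is not known from its key. The parameter shapes follow the Textract DetectDocumentText and StartDocumentTextDetection requests.

```javascript
// Placeholder ARNs; a real deployment would read these from configuration.
const SNS_TOPIC_ARN = "arn:aws:sns:us-east-1:123456789012:TextractJobComplete";
const TEXTRACT_ROLE_ARN = "arn:aws:iam::123456789012:role/TextractPublishToSns";

// Decide which Textract operation to call and build its parameters.
// Assumption: PNG/JPEG files are single images; PDFs and TIFFs may be multi-page.
function planTextractCall(bucket, key) {
  const s3Object = { Bucket: bucket, Name: key };
  const isSingleImage = /\.(png|jpe?g)$/i.test(key);

  if (isSingleImage) {
    return {
      operation: "DetectDocumentText",
      params: { Document: { S3Object: s3Object } },
    };
  }

  return {
    operation: "StartDocumentTextDetection",
    params: {
      DocumentLocation: { S3Object: s3Object },
      // Textract publishes the completion notice to this topic using this role.
      NotificationChannel: { SNSTopicArn: SNS_TOPIC_ARN, RoleArn: TEXTRACT_ROLE_ARN },
    },
  };
}

console.log(planTextractCall("input-documents", "incoming/invoice_123.pdf"));
```

The returned `params` object is what a handler would pass to the corresponding command in the AWS SDK for JavaScript; the call itself is omitted so that the sketch stays free of external dependencies.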

Textract results can be written to S3 (the result JSON is saved to a designated S3 location) or retrieved through a Get API. Our architecture uses an SNS topic, which we might name TextractJobComplete, for Textract’s job completion announcements. An SQS queue subscribes to the SNS topic for reliable delivery. When processing of “invoice_123.pdf” completes, Textract publishes an SNS message containing the job ID and status, and that message ends up as a new message in the SQS queue. The queue holds messages safely, so they are not lost if downstream processes are busy.

Post-OCR Processing & Classification

DocumentClassificationFunction is another Lambda function, triggered automatically by new messages on the SQS queue. When a Textract completion message reaches the queue, the function reads the job details and document identifiers from the message and then retrieves the Textract result. It can fetch the text blocks with Textract’s GetDocumentTextDetection API or read the output from S3 if the asynchronous job was configured to save it there. At this point, the function holds the raw text that Textract extracted from the document, plus any structured data.
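A sketch of the first part of that function follows: unwrapping the completion notice and flattening Textract blocks into text. It assumes the queue is subscribed to the topic without raw message delivery, so each SQS record body is an SNS envelope whose `Message` field is itself a JSON string. The function names and sample values are hypothetical, and the call to GetDocumentTextDetection is left out.

```javascript
// Unwrap Textract completion notices from an SQS-triggered Lambda event.
// Assumption: SQS body -> SNS envelope (JSON) -> Message (JSON string from Textract).
function parseTextractNotifications(sqsEvent) {
  return sqsEvent.Records.map((record) => {
    const envelope = JSON.parse(record.body);
    const message = JSON.parse(envelope.Message);
    return {
      jobId: message.JobId,
      status: message.Status,
      bucket: message.DocumentLocation?.S3Bucket,
      key: message.DocumentLocation?.S3ObjectName,
    };
  });
}

// Join the LINE blocks of a Textract result into plain text, in returned order.
function linesToText(blocks) {
  return blocks
    .filter((block) => block.BlockType === "LINE")
    .map((block) => block.Text)
    .join("\n");
}

// Hypothetical sample event built the way SNS-to-SQS delivery nests it.
const sampleSqsEvent = {
  Records: [
    {
      body: JSON.stringify({
        Type: "Notification",
        Message: JSON.stringify({
          JobId: "job-abc",
          Status: "SUCCEEDED",
          API: "StartDocumentTextDetection",
          DocumentLocation: { S3Bucket: "input-documents", S3ObjectName: "invoice_123.pdf" },
        }),
      }),
    },
  ],
};

console.log(parseTextractNotifications(sampleSqsEvent));
console.log(linesToText([
  { BlockType: "PAGE" },
  { BlockType: "LINE", Text: "Invoice #123" },
  { BlockType: "WORD", Text: "Invoice" },
  { BlockType: "LINE", Text: "Total: 25.00" },
]));
```

A handler would normally skip or alert on notices whose status is not SUCCEEDED before fetching results.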

DocumentClassificationFunction then categorizes the document. The goal is to identify the document type (when the pipeline handles several types) or to assign a classification that supports downstream routing. Two approaches are available, and they are not mutually exclusive.

- Using Amazon Comprehend: If we have predefined classes (e.g., classifying documents as “Invoice”, “Purchase Order”, “Contract”, “Miscellaneous”, etc.), we can train a custom classifier in Amazon Comprehend using example documents. Once trained, Lambda can call Comprehend’s ClassifyDocument API (for a custom classifier) or utilize Comprehend’s built-in features if the classification relies on entities or keywords. Comprehend will return a label and a confidence score for the document type. This classical ML approach works well when you have sufficient training data for each document category and the categories are established in advance.

- Using an LLM via Amazon Bedrock: Alternatively, we can leverage a Large Language Model (LLM) for classification without explicit prior training on a dataset, by providing prompts. Amazon Bedrock is a service that allows access to various foundation models (like Anthropic Claude, Amazon Titan, etc.) through an API. Our Lambda could construct a prompt that includes a snippet of the document text (or a summary) and ask the LLM, for example: “The following text is from a document. Possible document types include: Invoice, Purchase Order, Contract, Others. Determine which type best fits.” With few-shot prompting (providing a couple of examples in the prompt), LLMs can be very effective at classification. The Lambda would call Bedrock (which routes the prompt to an LLM, such as Claude) and receive the model’s answer regarding the class. This approach is flexible and doesn’t require a formal training process on our end, though it relies on the power of the pretrained model and good prompt engineering.
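The prompt-based approach can be sketched as two small helpers: one that builds the prompt from the document text and one that maps the model's free-form reply onto a known label. The helper names, the 4,000-character snippet limit, and the fallback to "Others" are assumptions for illustration; the call to Bedrock itself is not shown.

```javascript
const DOCUMENT_TYPES = ["Invoice", "Purchase Order", "Contract", "Others"];

// Build a classification prompt from a snippet of the extracted text.
// Assumption: truncating to the first maxChars characters is enough context.
function buildClassificationPrompt(documentText, maxChars = 4000) {
  return [
    "The following text is from a document.",
    `Possible document types include: ${DOCUMENT_TYPES.join(", ")}.`,
    "Determine which type best fits. Reply with the type name only.",
    "",
    "<document>",
    documentText.slice(0, maxChars),
    "</document>",
  ].join("\n");
}

// Map a free-form model reply onto one of the known labels.
// Falls back to "Others" when no known label appears in the reply.
function parseClassification(reply) {
  const normalized = reply.trim().toLowerCase();
  const match = [...DOCUMENT_TYPES]
    .sort((a, b) => b.length - a.length) // test longer labels first
    .find((type) => normalized.includes(type.toLowerCase()));
  return match ?? "Others";
}

console.log(buildClassificationPrompt("INVOICE No. 123\nTotal due: 25.00"));
console.log(parseClassification("Invoice. The text lists an amount due."));
```

Substring matching is a deliberately simple parser; asking the model for a structured reply and validating it would be more robust when explanations accompany the label.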

A hybrid approach often works well: use Comprehend first, and fall back to an LLM for difficult cases. In our pipeline, we use Bedrock for classification to demonstrate the LLM approach. The Lambda sends the document text to Bedrock together with classification instructions. The model replies with a label such as “Invoice”, optionally accompanied by an explanation. The document now has a classification, which the Lambda function persists, for example by updating a database record or by writing “classified document” JSON files for the following steps. In our architecture, the Lambda saves the OCR text and the classification output to S3, which hands the document over to the next stage.

Routing & Document-Specific Processing

Once classified, the document may need to be processed differently depending on its type. We introduce another Lambda (or potentially a Step Function workflow) triggered when a new “classified document” file appears in the S3 bucket from the previous step. Suppose the classification Lambda saved a JSON to classified-docs/Invoice/invoice_123.json (i.e., prefixed by the class). An S3 event on that location can trigger a DocumentProcessingFunction. This function will read the classification and text and then branch logic based on the document type. For example:

- If the document is an Invoice: The function might call Textract’s specialized AnalyzeExpense API (designed to extract fields from invoices and receipts) to get structured invoice data like vendor name, invoice number, line item details, totals, etc. Alternatively, it could use the text from earlier and apply some regex or simple rules for specific fields, but Textract’s invoice capability or Comprehend custom entity recognition is more robust. The function could also call Comprehend, which detects entities such as dates, amounts, and organizations in the text, to cross-verify Textract’s output. Combining Textract’s form analysis and Comprehend’s entity extraction can yield a structured JSON of all key invoice information.

- If the document is a Bill of Lading or Shipping document: The function might similarly extract key fields like shipment ID, sender, receiver addresses, contents, weights, etc., using Textract (if the layout is form-based) or perhaps an LLM to parse a semi-structured text block.

- If the document is a Patient Record or Medical form (for a healthcare scenario): The function could call Amazon Comprehend Medical to extract medical terms, prescription details, or diagnoses from the text. Comprehend Medical would return medically relevant entities (e.g., medical conditions, medications, test results) and identify PHI (personal health info) to be careful with. For free-form doctor notes, one might also invoke an LLM here to summarize the patient’s visit or to normalize terms (e.g., map “blood sugar is high” to a standardized observation).

- If the document is a Contract: The function might call an LLM via Bedrock to extract key clauses or summarize the contract in a few bullet points (since contracts are mostly free text). It could also use Comprehend to detect entities like dates (for expiration), parties involved, etc.
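The branching described in the list above can be sketched as a lookup from document type to an ordered list of processing steps. Everything here is illustrative: the step labels are hypothetical names that a real function would map to actual service calls, the type names assume the classifier emits them verbatim, and the key layout follows the classified-docs/Invoice/invoice_123.json example from the text.

```javascript
// Hypothetical step labels per document type; a real function would map each
// label to a service call (Textract, Comprehend, Comprehend Medical, or an LLM).
const PROCESSING_PLANS = new Map([
  ["Invoice", ["textract:AnalyzeExpense", "comprehend:DetectEntities"]],
  ["Bill of Lading", ["textract:AnalyzeDocument", "llm:ParseFields"]],
  ["Patient Record", ["comprehendmedical:DetectEntitiesV2", "llm:Summarize"]],
  ["Contract", ["llm:ExtractClauses", "comprehend:DetectEntities"]],
]);

// Parse "classified-docs/<type>/<id>.json" into its parts.
function parseClassifiedKey(key) {
  const match = /^classified-docs\/([^/]+)\/([^/]+)\.json$/.exec(key);
  if (!match) throw new Error(`Unexpected key layout: ${key}`);
  return { documentType: match[1], documentId: match[2] };
}

// Choose the processing steps for a classified document.
// Unknown types fall back to manual review (an assumption of this sketch).
function planProcessing(key) {
  const { documentType, documentId } = parseClassifiedKey(key);
  const steps = PROCESSING_PLANS.get(documentType) ?? ["manual-review"];
  return { documentId, documentType, steps };
}

console.log(planProcessing("classified-docs/Invoice/invoice_123.json"));
```

Keeping the plans in a table rather than in nested conditionals makes it easy to add a document type without touching the routing code.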

This step performs the type-specific data extraction and enrichment. It may involve additional Textract calls to capture missing details, NLP analysis with Comprehend or Comprehend Medical, and LLM calls for summarization or question answering. In some architectures, Step Functions orchestrates this stage when multiple sub-steps must run. AWS Solutions guidance suggests Amazon Bedrock for content normalization and enrichment, using LLMs to standardize how data is presented and to fill in implicit information (for example, deriving the “Length of hospital stay” from admission and discharge dates).

When processing completes, the document has typically yielded structured, enriched data. The function saves the output, which may include JSON files of extracted fields and their values as well as annotated documents. In our design, the enriched data and all generated outputs are stored in the “Processed Data” S3 bucket. At this point, the AI-driven analysis of the document is complete.

Validation & Quality Control

Automation is powerful, but in many business scenarios, we need to ensure that the extracted data is correct and complete. This is where a validation and review step comes into play. After processing the document data, a final Lambda function (e.g., ValidationAndRoutingFunction) is invoked, either directly through the code after processing or by another S3 event when the processed data file is saved. This function executes business rules and confidence checks on the extracted data.

Examples of validation rules:

- Required fields check: e.g. if an invoice should have an Invoice Number, Date, and Total, are those present in the extracted data?

- Consistency check: For example, do the line item sums add up to the total? If a document says “see attached”, but no attachment info was captured, flag it.

- Confidence thresholds: the pipeline can track confidence scores (Textract provides confidence per extracted element, Comprehend provides confidence per entity or classification, LLMs might give a score, or we might treat them differently). If any critical field was extracted with low confidence (or not at all), we might consider the output not fully trustworthy.

- Schema compliance: ensure the output data matches the expected schema or data types (numbers are numbers, dates are dates in the correct format, etc.).

If every check passes, the function marks the document as processed and fully verified. When problems arise, such as a total that cannot be parsed or low Textract confidence on a handwritten entry, the function should trigger human review. Amazon Augmented AI (Amazon A2I) supports this human-in-the-loop step by sending the document, together with the extracted data, to human reviewers.
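The four rule types listed above can be sketched as a single Node.js function. This is a minimal illustration rather than the pipeline’s actual code: the field names (`invoiceNumber`, `invoiceDate`, `total`), the shape of the input object, and the threshold of 90 are all assumptions chosen for the example.

```javascript
// Hypothetical field names and threshold; adjust to your own schema.
const REQUIRED_FIELDS = ["invoiceNumber", "invoiceDate", "total"];
const MIN_CONFIDENCE = 90; // assumes confidence is reported on a 0-100 scale

function validateInvoice(doc) {
  const issues = [];

  // Required fields check, plus a confidence threshold on each critical field
  for (const name of REQUIRED_FIELDS) {
    const field = doc.fields[name];
    if (!field || field.value === undefined || field.value === "") {
      issues.push(`missing:${name}`);
    } else if (field.confidence < MIN_CONFIDENCE) {
      issues.push(`lowConfidence:${name}`);
    }
  }

  // Schema compliance: total must be a number, date must look like YYYY-MM-DD
  const total = doc.fields.total ? doc.fields.total.value : undefined;
  if (total !== undefined && typeof total !== "number") {
    issues.push("schema:total");
  }
  const date = doc.fields.invoiceDate ? doc.fields.invoiceDate.value : undefined;
  if (date !== undefined && !/^\d{4}-\d{2}-\d{2}$/.test(date)) {
    issues.push("schema:invoiceDate");
  }

  // Consistency check: line items should add up to the total (compared in cents)
  if (typeof total === "number" && Array.isArray(doc.lineItems)) {
    const sumCents = doc.lineItems.reduce(
      (acc, item) => acc + Math.round(item.amount * 100),
      0
    );
    if (sumCents !== Math.round(total * 100)) {
      issues.push("consistency:lineItemsTotal");
    }
  }

  return { status: issues.length === 0 ? "VERIFIED" : "NEEDS_REVIEW", issues };
}
```

A document that returns `NEEDS_REVIEW` would be the one routed to human review, with the `issues` list telling the reviewer which fields to look at.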

The Lambda function starts a human review task through the A2I API. A2I assigns the work to internal staff or an external workforce, who review both the original document and the AI results and then confirm or correct the extracted data. For example, a reviewer might receive a scanned prescription whose medication name was extracted with low confidence and correct it if the AI misread it. A2I writes the reviewer’s corrections to S3 (the validated data store). When the review completes, the Lambda function is notified and can either rerun validation or accept the human-entered values directly.
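As a rough sketch, the request that starts a review could be assembled as shown below. The top-level keys follow the parameters of the A2I runtime `StartHumanLoop` operation (`HumanLoopName`, `FlowDefinitionArn`, `HumanLoopInput.InputContent`); everything inside `InputContent` (`taskObject`, `fields`) and the function name are assumptions that depend on your own worker task template. The name sanitization reflects the lowercase-and-hyphen naming style A2I expects, but check the API reference for the exact constraints.

```javascript
// Builds the parameter object for an A2I human loop.
// The InputContent keys are hypothetical and must match your task template.
function buildHumanLoopRequest({ documentId, s3Uri, lowConfidenceFields, flowDefinitionArn }) {
  const name = `review-${documentId}`
    .toLowerCase()
    .replace(/[^a-z0-9-]/g, "-") // keep only lowercase letters, digits, hyphens
    .slice(0, 63);

  return {
    HumanLoopName: name,
    FlowDefinitionArn: flowDefinitionArn,
    HumanLoopInput: {
      // InputContent is passed as a JSON string
      InputContent: JSON.stringify({
        taskObject: s3Uri,           // location of the original document
        fields: lowConfidenceFields, // only the fields the reviewer must check
      }),
    },
  };
}
```

The returned object would then be passed to the SDK call that starts the loop. Sending only the low-confidence fields keeps the review task small and limits what the reviewer sees.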

A human-in-the-loop approach also supports continuous improvement, because the corrected data can feed model retraining and prompt optimization. Over time, this improves the AI system and reduces the need for human supervision.

Storage of Results & Integration

Once validation completes (and human review, if it was needed), the data is ready for use. The final step saves the data to storage or delivers it to downstream systems. The AWS reference architecture stores extracted and validated data in an Amazon DynamoDB table. DynamoDB (a NoSQL database) suits semi-structured data and diverse document types: an item can be keyed by a value such as invoice_id and carry all extracted fields as attributes, which allows quick lookups. If the data is strongly relational, Amazon RDS or Amazon Aurora provides a structured relational store instead. In short, DynamoDB is most effective when flexibility and high scale are required, whereas strict relational requirements are better served by RDS or Aurora.
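To make the DynamoDB option concrete, here is one possible item shape with `invoice_id` as the key and the extracted fields stored as a nested attribute. The attribute names other than `invoice_id` are illustrative assumptions, not a prescribed schema.

```javascript
// Shapes a validated extraction result into a plain item object.
// Attribute names other than invoice_id are hypothetical.
function toInvoiceItem(extracted, validation, now = new Date()) {
  if (!extracted.invoiceNumber) {
    throw new Error("invoiceNumber is required to build the item key");
  }
  return {
    invoice_id: extracted.invoiceNumber, // partition key for quick lookups
    document_type: "INVOICE",
    status: validation.status,           // e.g. VERIFIED or NEEDS_REVIEW
    processed_at: now.toISOString(),
    fields: { ...extracted },            // all extracted fields, schema-free
  };
}
```

Because the item is a plain object, different document types can carry different `fields` without a schema migration, which is the flexibility the paragraph above refers to.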

After the data is stored, applications can use it. An Accounts Payable system can read new invoices from DynamoDB and enter them into the accounting database automatically. A medical record system can add an extracted visit summary to the patient’s electronic health record. The pipeline can also send a notification through Amazon SNS or email when a document has been processed successfully (or when human input is needed). If the IDP pipeline is part of a larger business process, it can trigger the next stage, such as an approval workflow or payment scheduling, once the invoice data has been stored.

Security and compliance are built into every layer of this design (additional information follows in the next section). S3 buckets can enforce encryption and access controls, data is encrypted in transit between AWS services, and IAM roles grant each Lambda function only the resource access it needs (the principle of least privilege). Textract and Comprehend are HIPAA-eligible and fall under AWS’s SOC, ISO, and PCI compliance programs, which makes them suitable for processing sensitive healthcare or financial data. AWS CloudTrail and CloudWatch Logs provide an audit trail showing who accessed a document, whether it was processed automatically, and which human reviewer handled it and when.

In summary, the reference architecture is a serverless workflow on AWS that invokes AI services and Lambda functions whenever a new file arrives. It runs asynchronously, using S3, SNS, and SQS to pass work reliably between stages. Its modular phases (ingest, extract, classify, enrich, validate, and store) make it straightforward to integrate new AI technologies, including LLMs. Confidence checks route uncertain results to human review through A2I, which maintains quality standards and builds trust among business users. The architecture shortens end-to-end document processing time while maintaining high accuracy, and it serves as an adaptable template for many application scenarios.

Use Cases in Transport & Medical Industries

Intelligent Document Processing is a versatile capability applicable across various industries. To provide a concrete discussion, let’s explore two sectors that typically handle extensive document workflows—Transportation/Logistics and Healthcare—and examine how IDP can be used to address specific challenges in each.

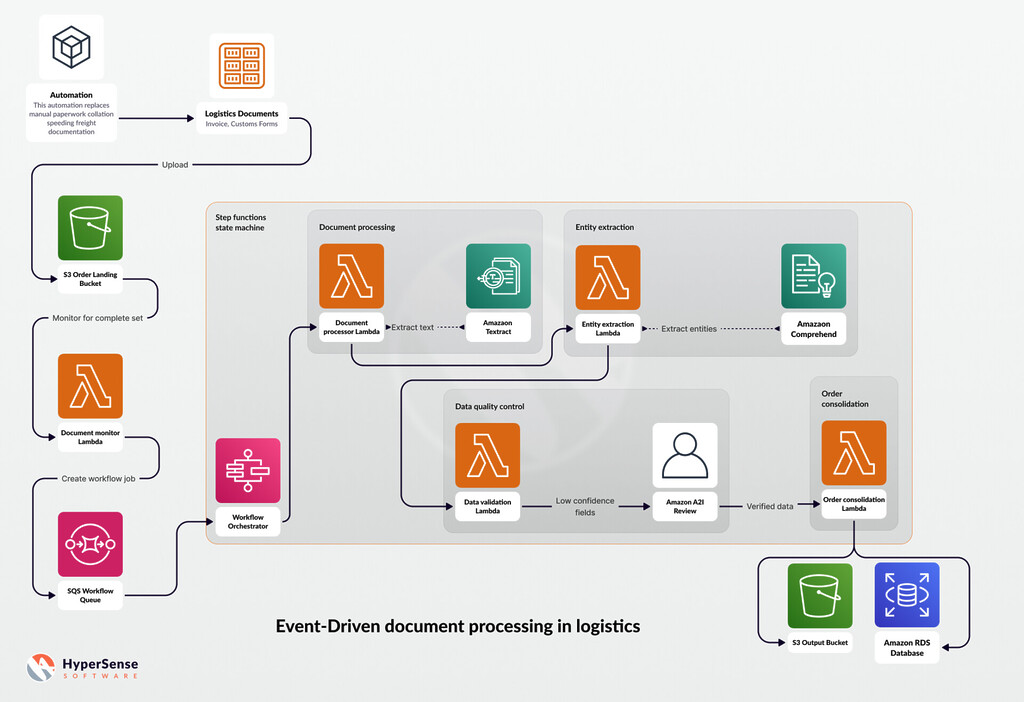

Transport Industry: Automating Invoices and Bills of Lading

Logistics operations depend heavily on paperwork such as shipping manifests, bills of lading, freight invoices, customs documents, and delivery receipts. Global supply chains handle tens of billions of these documents (especially in ocean freight forwarding). In trucking back offices, staff often key data from documents into company systems by hand, including accounting entries and bill-of-lading verification. Manual handling is slow and error-prone, which leads to shipment delays and incorrect invoices. It is also labor-intensive and scales poorly as shipping volume grows.

AWS enables the implementation of IDP solutions that automatically process transportation and logistics documents, achieving highly accurate and rapid document processing. We can illustrate this point with examples of two widely used shipping documents: the Invoice, which moves between carriers and clients, and the Bill of Lading (BOL), which officially records transported items.

Invoices and BOLs Workflow

A logistics company receives invoices and BOL scans by email or upload. Each document sent to the IDP system lands in an S3 bucket, which triggers the automated pipeline. Textract extracts the text from the invoice and the accompanying BOL. Invoice fields include the invoice number, date, vendor information, recipient details, line items (descriptions of charges), subtotal, taxes, and total due, among others. Textract’s form extraction can capture these fields automatically.

A Bill of Lading contains shipper and consignee details, origin and destination, carrier name, goods with weights or quantities, and reference numbers such as booking and container numbers. BOLs are typically semi-structured forms, which Textract is able to capture. A subsequent classification step determines the document type so that invoices and BOLs each follow the appropriate processing path.

Entity extraction and validation follow text extraction. Amazon Comprehend extracts entities such as dates, addresses, and company names and detects personal information (such as driver details on BOLs). This extracted metadata supports verification of the identified fields. For example, the pipeline can check that the Purchase Order number on the invoice matches a reference in existing systems and that the BOL destination country is consistent with shipping regulations. Business rules confirm that totals are arithmetically correct and that every required field is present (for example, a signature on BOLs and a tax ID on invoices).

When Textract expresses uncertainty about handwritten gross weights on BOLs, the system sends that specific document for human verification. The transportation clerk reviews an original BOL image displayed alongside the extracted data to make necessary modifications before confirmation. This approach establishes data quality checks for vital fields.

After validation, the extracted data is integrated with back-end systems. Invoices flow directly into the accounting system and the ERP, which removes manual bill entry. Accounts payable and receivable move faster because freight invoices can be reviewed and processed quickly, which helps capture early payment discounts and avoid late fees. Faster and more accurate processing also improves cash flow management and reduces errors that lead to overpayments. BOL data can synchronize directly with shipment orders in a transportation management system: the pipeline compares what was shipped against what the bill documents and then triggers follow-on steps such as scheduling deliveries.

Security and Compliance

Automation also strengthens security and compliance. The company gains better control over digital documents and can track every significant event. Only authorized personnel can access financial invoice information. An audit log records when each BOL was processed and by whom, which supports internal compliance management and discrepancy investigations. Digitized BOL data can also be stored and analyzed for operational insights, such as patterns in shipping times and frequent discrepancies, to support ongoing improvement.

Benefits in Transport

Automating invoice and BOL processing yields very tangible benefits:

- Speed: A process that once took days of physical document collection, double-checking, and data entry can be reduced to minutes. Companies gain faster billing cycles, smoother customs clearance, and contactless document handover, which minimizes the time goods spend waiting in transit.

- Accuracy: Combining AI extraction with validation rules yields high accuracy in data capture. Capturing freight charges and commodity codes correctly matters because errors cause customs disputes and payment issues, and IDP takes the values directly from the source document. Entity extraction pulls logistics data such as dates, addresses, and totals from documents and flags questionable entries for verification, which significantly reduces critical data errors.

- Cost Savings: Less human labor is needed for document handling, so a logistics company can handle more shipments without a proportional increase in administrative staff. The system can also prevent financial losses by detecting discrepancies, such as 100 units charged on an invoice when the BOL shows 90 units.

- Scalability: A serverless IDP pipeline scales up automatically for end-of-month or seasonal peaks, whereas a manual team hits resource limits that lead to extra hiring or processing delays.

- Compliance and Audits: Every processed document is stored digitally and indexed. During an audit, the company can search for BOLs and invoices and show when and how each was processed, which is much faster than searching filing cabinets. Rules can also verify operational compliance, for example by confirming that required export documentation exists and that hazardous-material notations on BOLs are valid.
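The discrepancy check mentioned under Cost Savings (100 units invoiced against 90 units on the BOL) can be sketched as a simple reconciliation. The `sku` and `quantity` property names are assumptions about how line items might be represented after extraction.

```javascript
// Compares invoiced quantities against the quantities recorded on the BOL.
// Line item shape ({ sku, quantity }) is a hypothetical post-extraction format.
function reconcileQuantities(invoiceLines, bolLines) {
  const shipped = new Map(bolLines.map((line) => [line.sku, line.quantity]));
  const discrepancies = [];

  for (const line of invoiceLines) {
    const bolQuantity = shipped.get(line.sku);
    if (bolQuantity === undefined) {
      // Charged for something the BOL does not list at all
      discrepancies.push({ sku: line.sku, invoiced: line.quantity, shipped: null });
    } else if (bolQuantity !== line.quantity) {
      discrepancies.push({ sku: line.sku, invoiced: line.quantity, shipped: bolQuantity });
    }
  }
  return discrepancies;
}
```

An empty result means the two documents agree; any entry in the list is a candidate for human review before payment.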

One example is a freight forwarding company that automated its document procedures. Its AWS architecture used an intake bucket and started a Step Functions workflow only after all required documents for a shipment (the invoice, the customs form, and any additional documents) had arrived in that bucket. Extractions with uncertain quality went through A2I human review before being saved, together with the verified data, in an orders database. As a result, shipment information became trackable in real time and shipment processing time dropped. This architecture is illustrated in the figure from the original solution (refer to the figure below).
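The “start only when the shipment is complete” condition in this example can be expressed as a small completeness check that runs each time a document arrives. The list of required document types here is an assumption for illustration; the source only states that an invoice, a customs form, and additional documents were needed.

```javascript
// Hypothetical set of document types required before a shipment is processed.
const REQUIRED_DOCUMENTS = ["INVOICE", "CUSTOMS_FORM", "BILL_OF_LADING"];

// Returns the required document types that have not arrived yet.
function missingDocuments(receivedTypes, required = REQUIRED_DOCUMENTS) {
  const received = new Set(receivedTypes);
  return required.filter((type) => !received.has(type));
}

// True when the workflow can be started for this shipment.
function readyToStart(receivedTypes, required = REQUIRED_DOCUMENTS) {
  return missingDocuments(receivedTypes, required).length === 0;
}
```

In a pipeline like the one described, a Lambda function triggered by each upload could call `readyToStart` with the document types seen so far for the shipment and start the state machine only when it returns true.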

Through this use case, we see IDP acting as a force multiplier in logistics operations—handling the heavy lifting of data extraction and validation and letting humans handle only exceptional cases or oversight. The result is a more efficient supply chain with fewer delays attributable to paperwork.

Medical Industry: Processing Patient Records and Insurance Documents

The healthcare industry manages an overwhelming volume of documents. Hospitals, clinics, and insurance organizations run daily operations on patient admission forms, doctor notes, laboratory results, medication prescriptions, health insurance submissions, explanations of benefits, and many other clinical records. A significant portion of a patient’s medical history consists of unstructured notes and scanned documents.

Medical staff and coding professionals traditionally spend long hours reviewing medical documents to obtain the information needed for EHRs and claims processing. This manual documentation consumes staff time, contributes to stress and burnout, and reduces the time available for patient care. Transcription errors and claim coding mistakes can lead to claim denials and, when vital information is miscommunicated, to potentially dangerous consequences for patients.

Automating Patient Records (Provider Side)

Consider a clinic that sees patients, and each visit generates a few documents: a registration form, the doctor’s handwritten notes or dictated report, perhaps lab results attached, and a discharge summary. An IDP solution can help in several ways:

- Digitizing Clinical Notes: Textract extracts handwritten and printed text from scanned consultation sheets. Amazon Comprehend Medical then analyzes the text to identify medical entities, for example conditions (Hypertension), medications (Lisinopril), family history (Diabetes), and follow-up instructions (a follow-up appointment in three months). It also detects protected health information, such as patient names and dates of birth, so that it can be handled securely. The extracted data populates the clinical documentation system automatically, so providers and scribes do not need to enter it manually.

- Summarization: An LLM accessed through Amazon Bedrock, or through an external API such as ChatGPT, can generate a concise summary or problem list from lengthy notes that contain extensive medical histories. For example: “A 45-year-old male with a history of hypertension reports headaches. The examination is routine. The plan is to increase the Lisinopril dosage and provide lifestyle education, with a follow-up in three months.” Medical staff can use such summaries to grasp the essential information in a patient’s record quickly, which supports continuity of care.

- Lab Results and Attachments: The IDP pipeline can process lab reports and medical imaging documents received as PDFs or attachments. Specific values (such as cholesterol measurements and blood sugar results) can be passed to the EHR and to alerting mechanisms that watch for out-of-range results. Automation helps ensure that critical data from external documents does not slip through the cracks.

- Compliance and Privacy: Automating record handling protects sensitive patient data by limiting the number of people who need to view it. The pipeline stores the relevant extracted information securely and can strip unnecessary identifiers. Because processing is digital and timestamped, audit tracking for HIPAA compliance improves: every automated process and user interaction with the data is recorded.
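As an illustration of the out-of-range monitoring mentioned under Lab Results, extracted values can be compared against configured reference ranges. The test names and numeric ranges below are placeholders for the example only; they are not clinical guidance, and a real deployment would take its ranges from the laboratory or the EHR.

```javascript
// Placeholder reference ranges for illustration only (not clinical guidance).
const REFERENCE_RANGES = {
  total_cholesterol_mg_dl: { min: 0, max: 200 },
  fasting_glucose_mg_dl: { min: 70, max: 100 },
};

// Returns the names of tests whose value falls outside its configured range.
// Tests without a configured range are ignored rather than flagged.
function flagOutOfRange(results, ranges = REFERENCE_RANGES) {
  return results
    .filter((result) => {
      if (!Object.prototype.hasOwnProperty.call(ranges, result.test)) return false;
      const range = ranges[result.test];
      return result.value < range.min || result.value > range.max;
    })
    .map((result) => result.test);
}
```

The flagged test names could then be attached to the record sent to the EHR or published as an alert for clinical staff.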

The medical use case benefits include faster patient record updating, reduced clerical burden on healthcare staff, and potentially improved quality of care (because information is more readily available and actionable). Clinicians get more time with patients instead of paperwork, and patients benefit from more accurate records and quicker service (e.g., no waiting for someone to file your lab results—the system does it instantly).

Processing Medical Claims and Insurance Documents (Payer Side)

Insurance teams must process claims together with supplementary documentation. During claims adjudication, insurers extract data from the medical claims that providers and patients submit in order to decide on approvals and reimbursements. A claim package can include claim forms, doctors’ notes, procedure codes, and various supporting documents. Healthcare claims submitted in 2018 numbered 6.1 billion, a volume that makes manual handling exceptionally expensive. Combining machine learning with IDP helps organizations process large numbers of claims while reducing manual work.

With an IDP pipeline:

- Claim Form Processing: Textract parses claim forms automatically, including standardized formats such as CMS-1500 and UB-04, which are commonly used in the US. Because Textract’s structured extraction recognizes checkboxes and tables, it can accurately capture basic data such as patient information, provider identification, procedure codes, and claimed amounts.

- Supporting Documents: Comprehend Medical extracts significant details from the clinical documents that support a claim. For example, the pipeline can verify an ICD-10 diabetes diagnosis code on the claim by checking that the medical notes specifically reference diabetes. OCR can likewise retrieve the injury date from an emergency room report to confirm that it matches the claim.

- Automated Adjudication Rules: After data extraction, the system applies a series of automated rules (implemented in Lambda or a business rules engine). It first verifies that the insurance coverage is valid, then checks for duplicate claims, and then checks whether pre-authorization requirements are met. Many basic claims that pass every check can be approved automatically through straight-through processing. These rules can run quickly because the IDP pipeline supplies the data they need.

- Human Review for Complex Cases: A human claims adjuster reviews complex cases and any claim that fails the standard checks or requires a medical necessity evaluation. The adjuster receives the extracted information together with the original documentation. IDP helps adjusters decide faster because NLP highlights the key information in physicians’ statements. Adjusters may also benefit from an LLM that summarizes the case or reports suspicious details.
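The rule sequence described above (coverage validity, duplicate detection, pre-authorization) can be sketched as an ordered set of checks that either auto-approves a claim or routes it to an adjuster. The claim fields, the lookup sets in `context`, and the way a duplicate key is formed are all assumptions made for this example.

```javascript
// Builds a key used to detect resubmissions of the same claim (hypothetical rule).
function claimKey(claim) {
  const codes = claim.procedureCodes.slice().sort().join(",");
  return `${claim.policyId}|${claim.providerId}|${claim.serviceDate}|${codes}`;
}

// Applies the checks in order and stops at the first one that fails.
// context holds hypothetical lookups: activePolicies, seenClaimKeys, preAuthRequired (all Sets).
function adjudicate(claim, context) {
  if (!context.activePolicies.has(claim.policyId)) {
    return { decision: "REVIEW", reason: "coverage-not-verified" };
  }
  if (context.seenClaimKeys.has(claimKey(claim))) {
    return { decision: "REVIEW", reason: "possible-duplicate" };
  }
  const needsPreAuth = claim.procedureCodes.some((code) => context.preAuthRequired.has(code));
  if (needsPreAuth && !claim.preAuthorizationId) {
    return { decision: "REVIEW", reason: "missing-pre-authorization" };
  }
  return { decision: "AUTO_APPROVE", reason: "passed-all-checks" };
}
```

Claims that return `AUTO_APPROVE` correspond to straight-through processing; every `REVIEW` result carries a reason that tells the adjuster why the claim was escalated.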

By automating many administrative tasks, insurers accelerate claims processing while keeping decisions consistent. Costs fall, and provider satisfaction improves because payments arrive sooner and fewer claims are denied. Patients get their claims resolved quickly with fewer administrative interactions. IDP can also improve claim coding precision, which reduces the medical coding errors that trigger payer rejections and leads to faster authorization and payment.

Insurers routinely send insured patients an Explanation of Benefits (EOB). An EOB explains what was paid, what was not paid, and why. IDP can also process incoming EOBs efficiently when an insurer acts as a secondary payer, although we will concentrate on the primary workflow.

Ensuring Compliance with HIPAA and Data Security

All healthcare solutions must comply with HIPAA regulations. Textract and Comprehend Medical are HIPAA-eligible AWS services, so they can be used to process protected health information under a Business Associate Agreement. The IDP system secures data by encrypting it at rest, including medical documents stored in S3 buckets and results in DynamoDB, and by enforcing access controls. The system maintains detailed logs of every access to the data. Automation reduces human contact with documents, which lowers the chance of accidental disclosure. The pipeline’s PHI detection also simplifies de-identification: it can remove names and IDs from documents to produce anonymized datasets for analytics or research, which is easier than anonymizing data manually.
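A minimal sketch of the de-identification step follows. It assumes PHI entities have already been detected and are described by `BeginOffset`, `EndOffset`, and `Type`, which mirrors the offsets Comprehend Medical reports for detected PHI; the entities in any usage would come from that detection step, and the default set of types to redact is an assumption. Overlapping entities are not handled.

```javascript
// Replaces detected PHI spans with a [TYPE] marker.
// Entities are processed from the end of the text so earlier offsets stay valid.
function redactPhi(text, entities, typesToRedact = new Set(["NAME", "ID"])) {
  const targets = entities
    .filter((entity) => typesToRedact.has(entity.Type))
    .sort((a, b) => b.BeginOffset - a.BeginOffset);

  let redacted = text;
  for (const entity of targets) {
    redacted =
      redacted.slice(0, entity.BeginOffset) +
      `[${entity.Type}]` +
      redacted.slice(entity.EndOffset);
  }
  return redacted;
}
```

Working backwards through the text is the key design choice: replacing a span changes the length of the string, so applying edits from the end avoids recomputing the offsets of the remaining entities.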

Enhancing Patient Experience with IDP

Patients benefit from IDP even though it works behind the scenes. Administrative steps go faster when patients have fewer forms to complete, because existing data can pre-populate them. Insurance claims can be approved within days or even hours, without the delays of paper-based processing.

The healthcare use case shows how IDP streamlines both clinical document processing and insurance claim processing. By extracting information from paper documents, medical organizations can deliver better care and faster financial transactions. Healthcare professionals can find essential medical details quickly without searching through paper records, while insurers can process large volumes of claims at high speed. The sector reduces administrative expenses, complies more readily with medical record privacy rules, and delivers better service to patients and providers. IDP is therefore a core building block for digital healthcare processes that support connectivity goals and data analytics.

Implementation Considerations

Implementing an Intelligent Document Processing solution in a real-world environment requires careful planning beyond just the core workflow. In this section, we discuss important considerations to ensure the solution is secure, compliant, well-architected, and maintainable. We cover data privacy and regulatory compliance (like GDPR and HIPAA), deployment strategies for the AWS infrastructure and code, and ongoing monitoring and optimization of the system.

Data Privacy and Compliance

Any system that processes business documents will likely handle sensitive information: personal data, financial details, or confidential business information. Ensuring data privacy and regulatory compliance is essential.

Access Control & Security

Apply the principle of least privilege using IAM roles and policies. Each Lambda function should access only the S3 buckets and AWS resources it needs. For example, the Textract Lambda function can be allowed to read from the input S3 bucket but no other bucket, and the Lambda function that writes to DynamoDB can be restricted to adding items to its designated table. To keep traffic on the AWS network rather than the public internet, you can use VPC endpoints or PrivateLink to reach services such as S3 and Bedrock. Service-to-service transfers (S3 to Lambda and Lambda to Textract) are encrypted in transit, and you can require TLS 1.2 or later for external data exchanges.
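A least-privilege policy for the Textract Lambda function might look like the sketch below, expressed as a function that returns an IAM policy document. The bucket name is supplied by the caller, the `Sid` values are arbitrary labels, and the exact list of Textract actions is an assumption that depends on which Textract APIs your function calls. Textract actions are granted on `"*"` here because they are not scoped to a bucket or table; the S3 statement is what confines document access.

```javascript
// Returns an IAM policy document granting read access to one input bucket
// and permission to call Textract. Sid values and action list are illustrative.
function textractLambdaPolicy(inputBucket) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "ReadInputDocuments",
        Effect: "Allow",
        Action: ["s3:GetObject"],
        // Objects in the input bucket only; no other bucket is reachable
        Resource: [`arn:aws:s3:::${inputBucket}/*`],
      },
      {
        Sid: "CallTextract",
        Effect: "Allow",
        Action: ["textract:AnalyzeDocument", "textract:DetectDocumentText"],
        Resource: "*",
      },
    ],
  };
}
```

The resulting object can be serialized with `JSON.stringify` and attached to the function’s execution role through your IaC tool of choice.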

Encryption

Enable server-side encryption (AES-256 or AWS KMS) on all S3 buckets to protect document contents at rest. For highly sensitive data, AWS Key Management Service (KMS) with customer-managed keys gives you control over who can decrypt the data. Enable encryption at rest for DynamoDB and RDS databases as well. Lambda’s ephemeral storage, including /tmp, is encrypted by AWS, but you should still minimize writing sensitive information to disk. When calling an LLM through Bedrock or an external API, avoid placing PII in prompts, or replace it with tokens or anonymized values, unless you have a data processing agreement with the API provider (such as OpenAI for ChatGPT).

Data Minimization

Store and keep only the data you need. If the document image is not required after processing, do not retain it (or schedule it for deletion). One option is to keep only the structured data after extraction and place the original document in secured, access-controlled storage. This reduces exposure if a security breach occurs. Under GDPR, personal data should be kept no longer than necessary for the purpose for which it was collected. S3 Lifecycle rules can implement retention policies by automatically deleting data or transitioning it to colder storage after X days. DynamoDB entries can likewise be deleted once they have served their purpose.
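A retention policy of this kind can be sketched as a function that produces an S3 lifecycle configuration. The structure (`Rules`, `Filter.Prefix`, `Transitions`, `Expiration`) follows the S3 lifecycle configuration format; the prefix, the day counts, the rule ID format, and the choice of the `GLACIER` storage class are assumptions for the example.

```javascript
// Builds a lifecycle configuration that archives objects under a prefix
// and later deletes them. All values are supplied by the caller.
function buildLifecycleConfiguration({ prefix, archiveAfterDays, deleteAfterDays }) {
  if (archiveAfterDays >= deleteAfterDays) {
    throw new Error("archiveAfterDays must be smaller than deleteAfterDays");
  }
  return {
    Rules: [
      {
        ID: `retention-${prefix.replace(/[^A-Za-z0-9]/g, "-")}`,
        Status: "Enabled",
        Filter: { Prefix: prefix },
        // Move to colder storage first, then delete when retention ends
        Transitions: [{ Days: archiveAfterDays, StorageClass: "GLACIER" }],
        Expiration: { Days: deleteAfterDays },
      },
    ],
  };
}
```

For example, `buildLifecycleConfiguration({ prefix: "raw/", archiveAfterDays: 30, deleteAfterDays: 365 })` describes a rule that archives raw uploads after 30 days and removes them after a year. The retention period itself should come from your legal and compliance requirements.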

Compliance Standards

The solution must comply with GDPR when it operates in European jurisdictions, and with comparable regulations in other regions. Users should be able to request deletion of their data (the right to be forgotten), so the storage architecture should make it possible to locate all data related to an individual and remove it completely. HIPAA applies to healthcare; Textract and Comprehend Medical are HIPAA-eligible, so healthcare organizations can sign a Business Associate Agreement (BAA) and use these services for PHI. Even with HIPAA-eligible services, you remain responsible for audit controls and access management. Enable AWS CloudTrail to log API calls, which records who accessed which resources, and use Amazon CloudWatch Logs to capture your application logs for auditing.

Human Review Process Security

With Amazon A2I, the review workforce can be your own employees or an external third-party vendor. Reviews involving PHI or other highly sensitive data should be performed by internal employees who have completed HIPAA training or an equivalent course. A2I can be configured with a private workforce, and all reviewer actions are logged. Reviewers should work through the provided review UI, which should display only the data needed to make a decision. Design tasks to give reviewers only the necessary information; for example, when a single field needs validation, show the relevant snippet instead of the entire document.

Output Sanitization

Proceed with caution when incorporating generative AI (LLMs) through external APIs that you do not control. Amazon Bedrock provides access to established models, including fine-tuned versions, and processes data within the AWS environment, so information stays within the account boundary. Amazon documentation states that Bedrock does not use input data for model training and does not retain it beyond processing (this information is current as of writing). This makes Bedrock less of a concern than sending data to a public API. If you use an external API such as OpenAI’s, verify that its policies meet your compliance requirements and protect PII by obscuring names or substituting placeholder identifiers.
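The placeholder approach can be sketched as a pair of functions: one swaps known sensitive values for tokens before the prompt leaves your environment, and the other restores them in the model’s response. The token format (`<PII_1>`, `<PII_2>`, and so on) is an arbitrary choice for this example, and the list of sensitive values is assumed to come from an earlier detection step.

```javascript
// Replaces each sensitive value with a numbered placeholder token.
// Returns the masked text and the mapping needed to restore it later.
function maskValues(text, sensitiveValues) {
  const mapping = new Map();
  let masked = text;
  sensitiveValues.forEach((value, index) => {
    if (!value) return; // skip empty values, which would match everywhere
    const token = `<PII_${index + 1}>`;
    mapping.set(token, value);
    masked = masked.split(value).join(token); // replace every occurrence
  });
  return { masked, mapping };
}

// Restores the original values in text returned by the model.
function unmaskValues(text, mapping) {
  let restored = text;
  for (const [token, value] of mapping) {
    restored = restored.split(token).join(value);
  }
  return restored;
}
```

Only the masked text is sent to the external API; the mapping stays inside your environment. This is a simple string substitution, so it protects only the values you pass in and does not detect PII on its own.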

Compliance Certifications

The solution follows current best practices and industry standards. The Security Pillar of the AWS Well-Architected Framework provides relevant guidelines. Many AWS services in our stack are covered by ISO 27001 and SOC 2 certifications, which helps with overall compliance audits. IDP automation also supports compliance by generating detailed access logs and tracking, which demonstrate transparency and accountability.

Regulatory Workflows

Certain business sectors require additional document screening. Financial operations, for example, may need sanctions screening, in which names found in a document are checked against watchlists. The pipeline can integrate this check by extracting names with Comprehend and then validating them against a screening service or list. Some legal applications need specific rules that determine how long documents are stored. The system design should allow such compliance steps to be added easily.

Security and privacy must be built into an IDP solution from the start. With AWS encryption features, IAM, and VPC isolation, the system can protect sensitive data and meet regulatory requirements while still delivering the benefits of automation. A well-designed automated system can be more secure than a manual process because it minimizes human contact with data and operates in a monitored environment.

Deployment Strategies

Building an IDP solution involves deploying numerous cloud resources (Lambdas, S3 buckets, queues, etc.) and writing custom code (for our Node.js Lambdas, for example). Adopting good deployment practices will make the system reliable and easier to manage as it evolves:

Infrastructure as Code (IaC)

Define your AWS infrastructure with an IaC tool such as AWS CloudFormation, AWS CDK (Cloud Development Kit), or Terraform. IaC puts your architecture under version control and ensures consistent deployments across development, testing, and production environments. CloudFormation templates and CDK scripts can create S3 buckets, SNS topics, SQS queues, DynamoDB tables, Lambda functions with their IAM roles and environment variables, and Step Functions state machines. For example, a single CloudFormation stack can create an IDP pipeline with all required resources. IaC also makes it faster and less error-prone to create new instances of the solution in other AWS regions or for other business units.

CI/CD for Code

Treat your Lambda functions as application code and manage them through a CI/CD pipeline with automated build and deployment steps. AWS developer tools such as CodePipeline and CodeBuild, or alternatives such as Jenkins and GitLab CI, can serve this purpose. When you push changes to your Node.js code in Git, the pipeline runs tests (for example, on your extraction logic and prompt formats), then packages and deploys the code with the AWS Serverless Application Model (SAM) or the CDK. These quality gates let you roll out updates in controlled stages.

Configuration Management

Store configuration values such as database connection strings, Bedrock API endpoints, and secret keys in AWS Systems Manager Parameter Store or AWS Secrets Manager. Your Lambdas read these values at runtime. This approach keeps sensitive information out of the code and lets you change settings without changing the code itself. For example, you can swap in a new Comprehend custom model ARN or adjust a confidence threshold by updating a parameter rather than redeploying.
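Because parameters arrive as strings, it helps to validate them once when the function starts. The sketch below assumes the raw values have already been fetched (for example, from Parameter Store) into a plain object. The key names classifierArn and confidenceThreshold, the default of 0.9, and the ARN prefix check (which assumes the standard aws partition) are hypothetical choices for illustration.

```javascript
// rawParams: plain object of strings, as they might come from Parameter Store.
// Key names and the default threshold are assumptions made for this sketch.
function parsePipelineConfig(rawParams) {
  const arn = rawParams.classifierArn;
  if (typeof arn !== "string" || !arn.startsWith("arn:aws:comprehend:")) {
    throw new Error("classifierArn must be a Comprehend ARN");
  }

  const rawThreshold = rawParams.confidenceThreshold ?? "0.9";
  const threshold = Number(rawThreshold);
  if (
    String(rawThreshold).trim() === "" ||
    !Number.isFinite(threshold) ||
    threshold < 0 ||
    threshold > 1
  ) {
    throw new Error("confidenceThreshold must be a number between 0 and 1");
  }

  // Freeze the result so handlers cannot mutate shared configuration.
  return Object.freeze({ classifierArn: arn, confidenceThreshold: threshold });
}
```

Failing fast on a malformed parameter surfaces a bad configuration change at the first invocation instead of as subtle extraction errors later.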

Environment Separation

Give development, QA, and production each their own AWS account, or at least a logically separate environment. Separation ensures that tests never touch operational data. The same IaC templates deploy the stack to every environment, so what you test matches what you release. Test with synthetic documents and redacted real ones to validate the pipeline. The Step Functions console shows a visual view of each execution, which makes it easy to follow the path a document took during testing. Promote the stack to production only once it has proven reliable in the lower environments.

Testing with Real Documents

IDP solutions are difficult to test because their behavior depends on AI services. Build a regression test suite from a set of sample documents and their expected outputs. Each time you modify the code, run the pipeline against these known samples to confirm that nothing has broken (for example, after changing how you parse Textract JSON, verify that invoice totals are still extracted correctly). LLM prompt logic likewise needs several test cases to confirm that it produces output in the expected structure.
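A regression suite of this kind can be quite small. The sketch below assumes each sample pairs a stored input (such as a saved Textract response) with the fields you expect to extract from it, and that extract is your own parsing function. All names and the numeric tolerance are hypothetical.

```javascript
// Compares expected fields with actual ones and lists every mismatch.
// Numbers are compared with a small tolerance (an assumed default).
function compareExtraction(expected, actual, tolerance = 0.005) {
  const mismatches = [];
  for (const [field, want] of Object.entries(expected)) {
    const got = actual[field];
    const same =
      typeof want === "number" && typeof got === "number"
        ? Math.abs(want - got) <= tolerance
        : want === got;
    if (!same) mismatches.push({ field, expected: want, actual: got });
  }
  return mismatches;
}

// samples: [{ name, input, expected }]; extract: your parsing function.
// Returns only the samples that failed, with their mismatched fields.
function runRegressionSuite(samples, extract) {
  return samples
    .map((s) => ({
      name: s.name,
      mismatches: compareExtraction(s.expected, extract(s.input)),
    }))
    .filter((r) => r.mismatches.length > 0);
}
```

Running the parser against stored service responses keeps the suite fast and free of per-call charges; a smaller set of end-to-end tests can still exercise the live services.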

Blue/Green or Canary Deployments

Deploy updates to Lambdas or models gradually. In a canary deployment, the new version handles a small share of traffic alongside the current version, and you shift the remaining traffic only after it proves itself. AWS CodeDeploy supports both linear and canary deployments for Lambda functions. This approach is especially useful when you update an LLM version or change logic and want to observe results on a subset of documents before full rollout.

Scalability Settings

Tune Lambda concurrency limits to your needs. Lambda scales automatically to handle traffic, but setting reserved concurrency and SQS batch sizes helps protect downstream services from being overwhelmed. Textract, for instance, enforces account-level quotas on concurrent jobs and request rates, so either the Step Functions workflow should throttle Textract requests or the Lambdas should limit their parallel calls. Treat these quotas and limits as explicit configuration points in your deployment.
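Inside a single Lambda, limiting parallel calls can be done with a small worker pool. The following is a generic sketch, not tied to any AWS SDK: worker stands in for whatever asynchronous call you make per document (such as starting a Textract job), and the limit would come from configuration.

```javascript
// Runs worker(item, index) for every item, with at most `limit` calls in
// flight at any moment. Results keep the order of the input array.
async function mapWithConcurrency(items, limit, worker) {
  if (!Number.isInteger(limit) || limit < 1) {
    throw new Error("limit must be a positive integer");
  }
  const results = new Array(items.length);
  let next = 0;

  // Each runner repeatedly takes the next unclaimed index until none remain.
  async function runner() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  const runners = Array.from({ length: Math.min(limit, items.length) }, () => runner());
  await Promise.all(runners);
  return results;
}
```

Note that this only bounds concurrency within one invocation. Reserved concurrency on the function bounds the number of invocations, so the effective ceiling on downstream calls is roughly the product of the two.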

Cost Management During Deployment

Keep costs in mind throughout deployment, especially during development. Textract and Bedrock are billed per use, so avoid repeatedly processing 1,000-page documents during development and testing; use small documents or subsets instead. Use AWS Cost Explorer and AWS Budgets to monitor spending, particularly when new parts of the pipeline go live.

An IDP pipeline deserves the same discipline as any other software project, with automated deployment and testing throughout its lifecycle. Automation reduces human error and keeps the solution under control as you add new document types and services. Modern DevOps practices fit serverless IDP systems well: there are no servers to manage, but the system still benefits from automated, version-controlled code and configuration.

Monitoring and Optimization

Once your IDP solution is up and running, you must monitor its performance and continually optimize it for reliability, speed, and cost. Here are key considerations:

Logging and Tracing

Every Lambda function should write key events and errors to Amazon CloudWatch Logs; without them, diagnosing a document that failed processing is difficult. Use structured log records that include the document ID, the step name, and any error message. AWS X-Ray traces requests across services, so developers can follow a single document from the S3 trigger through a Lambda function to Textract and on to the next Lambda, which helps identify bottlenecks. Step Functions records a detailed history for each execution, which you can review to see which step failed.
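A structured log record can be as simple as one JSON object per line. The helper below is a minimal sketch; the field names documentId and step are assumptions, and the write parameter exists only so the function can be tested without printing. In Lambda, anything written with console.log ends up in CloudWatch Logs.

```javascript
// Emits one JSON log line per event. Field names such as documentId and step
// are illustrative; use whatever identifiers your pipeline already has.
function logEvent(level, fields, write = console.log) {
  const entry = { timestamp: new Date().toISOString(), level, ...fields };
  // Error objects serialize to "{}" by default, so copy the useful parts.
  if (entry.error instanceof Error) {
    entry.error = { name: entry.error.name, message: entry.error.message };
  }
  const line = JSON.stringify(entry);
  write(line);
  return line;
}
```

A call such as logEvent("ERROR", { documentId, step: "parse-textract", error: err }) then makes it possible to filter logs by document ID or step name when tracing a failure.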

Metrics and Alarms

Defining and collecting metrics that indicate the system’s health is essential. AWS Lambda automatically tracks invocations, errors, duration, etc. Key metrics to watch:

- Error rates on Lambdas (if a function fails frequently, something’s wrong with either input assumptions or an external call).

- Textract job errors (if Textract returns an error for a file, maybe the file is too large or has an unsupported format; those should be logged and counted).

- Processing latency – you might measure end-to-end time from document upload to completion. You can glean this by timestamps or by instrumenting the code to emit a custom metric (CloudWatch custom metric) for total pipeline time.

- Queue length for SQS: If the queue begins to back up (length increasing), downstream processing can’t keep up. This could occur if there are volume spikes or if a downstream service slows down. Set an alarm on queue depth (the ApproximateNumberOfMessagesVisible metric) exceeding some threshold for X minutes so that you can investigate (perhaps increase concurrency or identify the blocker).

- Throughput – how many documents per hour/day are being processed, and is that meeting expectations?

- Human review percentage – track how many documents go to A2I. If that percentage is high, the AI is struggling (maybe the model needs improvement, or there’s a new document pattern). This can be a KPI to optimize over time (you’d like it to decrease as the system learns).

Set up Amazon CloudWatch Alarms for critical metrics. For instance, create an alarm if any Lambda has an error rate exceeding 5% within a 5-minute window or if end-to-end latency surpasses, for example, 1 hour for a document (possibly indicating something is stuck). Configure notifications for alarms (SNS email to DevOps, etc.).
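One way to emit the end-to-end latency metric mentioned above is the CloudWatch Embedded Metric Format, in which a specially structured JSON log line is turned into a metric. The sketch below builds such a record. The namespace IDP/Pipeline, the metric name EndToEndLatency, and the Stage dimension are assumptions chosen for the example.

```javascript
// Builds a CloudWatch Embedded Metric Format record for pipeline latency.
// Namespace, metric name, and dimension are illustrative assumptions.
function buildLatencyMetric(documentId, uploadedAtMs, completedAtMs, stage = "dev") {
  return {
    _aws: {
      Timestamp: completedAtMs, // milliseconds since the Unix epoch
      CloudWatchMetrics: [
        {
          Namespace: "IDP/Pipeline",
          Dimensions: [["Stage"]],
          Metrics: [{ Name: "EndToEndLatency", Unit: "Milliseconds" }],
        },
      ],
    },
    Stage: stage,
    EndToEndLatency: completedAtMs - uploadedAtMs,
    // Not a dimension: kept as a searchable log field only.
    documentId,
  };
}
```

In the final Lambda of the pipeline, console.log(JSON.stringify(buildLatencyMetric(id, uploadedAt, Date.now(), "prod"))) would publish the value, and an alarm could then be defined on that metric. Keeping documentId out of the dimensions avoids creating a separate metric for every document.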

Performance Tuning

Optimize the Lambdas and workflow for speed where possible:

- Allocate appropriate memory to Lambda functions. Lambda assigns CPU in proportion to memory, so a higher memory setting also gives the function more compute. Post-processing OCR and NLP results can be CPU-intensive. If a Lambda runs slowly or hits its timeout, try allocating more memory: the speedup can shorten the duration enough to offset the higher per-millisecond price.

- Use concurrency effectively. Textract’s asynchronous jobs handle multi-page documents in the background, so several jobs can run at the same time. Step Functions can process documents in parallel, either as separate state machine executions or with the built-in Map state.

- Cache reference data that is retrieved repeatedly, either in Lambda memory or, when you need a larger shared store, in DynamoDB or ElastiCache. For example, validating hospital codes on claims is faster when the list is read from DynamoDB rather than fetched from an external service on every call.

- Keep LLM prompts concise to minimize token usage, which reduces both cost and latency. Consider whether combining multiple tasks into a single prompt would lower the number of API requests. Balance this against complexity: several simple requests can be easier to manage and debug than one complex combined call.
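The in-memory caching option from the list above can be sketched as a small time-to-live cache created at module scope, where it survives across warm invocations of the same Lambda instance. The function names are hypothetical, and loader stands in for whatever fetches the reference data (for example, a DynamoDB read returning a promise).

```javascript
// Creates a cache whose entries expire after ttlMs milliseconds.
// `now` is injectable so the behavior can be tested with a fake clock.
function createTtlCache(ttlMs, now = Date.now) {
  const entries = new Map();

  return function getOrLoad(key, loader) {
    const hit = entries.get(key);
    if (hit && hit.expiresAt > now()) return hit.value;

    // Cache whatever the loader returns. If that is a promise, concurrent
    // callers share the same in-flight request.
    const value = loader(key);
    entries.set(key, { value, expiresAt: now() + ttlMs });

    // If the loader returned a promise that rejects, evict it so that the
    // next call retries instead of replaying the failure.
    Promise.resolve(value).catch(() => {
      const current = entries.get(key);
      if (current && current.value === value) entries.delete(key);
    });

    return value;
  };
}
```

Each Lambda instance holds its own copy, so a short TTL is a reasonable way to bound how stale the reference data can become.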

Cost Optimization

Serverless architectures are cost-efficient by design (you pay per use), but costs can still add up with high volumes, especially from AI service usage (Textract, Bedrock, Comprehend API calls each have a cost). Monitor the AWS Cost Explorer to see which services incur the most cost.

- Textract charges per page processed, so strip empty or unnecessary pages before submission. Pricing also differs between plain text extraction and form/table analysis; use the cheaper text-detection API for documents that do not need form analysis.

- Comprehend charges by the number of characters analyzed. For unusually long documents, check whether you really need to run Comprehend on the entire text. One approach is to classify the document first and run Amazon Comprehend Medical only on documents classified as medical.

- Bedrock/LLM calls can be among the most costly operations in the system, depending on model selection and usage patterns. Use them only where they add value: try simpler rules and Comprehend first, and bring in an LLM when those fall short. Prefer smaller models with well-crafted prompts, and keep responses short. If you call an external service such as the ChatGPT API instead, choose the model deliberately: GPT-4 is more capable, but it costs more than GPT-3.5.

- Lambda costs are mostly related to duration. Moving Lambda functions that perform slow JSON parsing to a faster programming language or alternative method might lead to cost savings (but Node is still acceptable, provided you optimize slow execution points).

- Free tier and savings: During initial development, take advantage of AWS Free Tier allowances for Lambda, Comprehend, and other services. Once production volume is steady, consider commitment-based discounts such as Compute Savings Plans, which cover Lambda usage.
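To see how the cost levers above interact, a back-of-the-envelope estimator can help. The sketch below is purely illustrative: every rate is supplied by the caller and must come from the current AWS pricing pages, and the assumption that Comprehend is billed in units of 100 characters should be verified against those pages. All field names are hypothetical.

```javascript
// Rough cost estimate for a batch of documents. No real prices are embedded;
// `rates` must be filled in from current pricing. Field names are assumptions.
function estimateCost(volume, rates) {
  // Blank pages are dropped before submission, so they are not billed.
  const billablePages = volume.pages - volume.blankPages;
  const formPages = Math.min(volume.pagesNeedingForms, billablePages);
  const textOnlyPages = billablePages - formPages;

  const breakdown = {
    textract: textOnlyPages * rates.textPerPage + formPages * rates.formsPerPage,
    // Assumes billing in units of 100 characters, rounded up.
    comprehend: Math.ceil(volume.comprehendChars / 100) * rates.comprehendPerUnit,
    llm:
      (volume.llmInputTokens / 1000) * rates.llmInputPer1kTokens +
      (volume.llmOutputTokens / 1000) * rates.llmOutputPer1kTokens,
  };
  breakdown.total = breakdown.textract + breakdown.comprehend + breakdown.llm;
  return breakdown;
}
```

Comparing the estimate with and without blank-page removal, or with fewer pages routed to form analysis, gives a quick sense of which optimization is worth implementing first.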

Model and System Evolution

Document types and layouts change over time, so evaluate output quality periodically. Give end users a way to report fields that are frequently wrong, and use that feedback to retrain Comprehend custom models and adjust LLM prompts. Aggregate the corrections made during human review as well, since they are valuable data for improving the system. For example, you might update your custom classifier every few months with examples that were misclassified and later corrected, or adjust the extraction logic when invoices from new vendors perform poorly.
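Aggregating review corrections does not need much machinery. The sketch below assumes each human review is stored as an object with a corrections map from field name to corrected value; that record shape is an assumption for illustration, not a format produced by any particular service.

```javascript
// reviews: [{ documentId, corrections: { fieldName: correctedValue } }]
// Returns fields ordered by how often reviewers had to correct them.
function summarizeCorrections(reviews) {
  const counts = new Map();
  for (const review of reviews) {
    for (const field of Object.keys(review.corrections)) {
      counts.set(field, (counts.get(field) ?? 0) + 1);
    }
  }
  return [...counts.entries()]
    .map(([field, corrected]) => ({
      field,
      corrected,
      rate: corrected / reviews.length, // share of reviewed documents affected
    }))
    .sort((a, b) => b.corrected - a.corrected);
}
```

Fields at the top of the list are natural candidates for prompt changes, new training examples, or additional validation rules.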

Fail-Safe and Recovery

Even with diligent system management, failures will occur, whether from regional outages or software bugs. Design processing steps to be idempotent so that they can be repeated safely. If a Lambda function fails midway, for instance, reprocessing the document must not create duplicate records; a status flag in the database (such as processing or completed) prevents this. Configure dead-letter queues (DLQs) for both SQS and Lambda so that messages that repeatedly fail are set aside for administrators to inspect. Also provide a reprocessing mechanism, such as a script that redrives failed documents from the DLQ once the bug is fixed.
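The status-flag idea can be illustrated with an in-memory stand-in for the database. In a real deployment the claim step would be a conditional write (for example, a DynamoDB put guarded by an attribute_not_exists condition on the key) so that two concurrent invocations cannot both succeed. The store, status values, and function names here are hypothetical, and the handler is synchronous only to keep the sketch short.

```javascript
// In-memory stand-in for a status table. A real store would use an atomic
// conditional write for claim() so it is safe across concurrent invocations.
function createStatusStore() {
  const statuses = new Map();
  return {
    claim(documentId) {
      if (statuses.has(documentId)) return false; // already processing or done
      statuses.set(documentId, "PROCESSING");
      return true;
    },
    complete(documentId) {
      statuses.set(documentId, "COMPLETED");
    },
    release(documentId) {
      statuses.delete(documentId); // allow a later retry after a failure
    },
    get(documentId) {
      return statuses.get(documentId);
    },
  };
}

// Runs handler at most once per document; duplicate deliveries are skipped.
function processOnce(store, documentId, handler) {
  if (!store.claim(documentId)) return { skipped: true };
  try {
    const result = handler(documentId);
    store.complete(documentId);
    return { skipped: false, result };
  } catch (err) {
    store.release(documentId);
    throw err;
  }
}
```

Releasing the claim on failure lets the queue retry the message, and once retries are exhausted the message lands in the DLQ with the document still unmarked, ready to be redriven after the fix.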

User Dashboard and Reporting

Provide a basic dashboard that gives the business visibility into processing metrics such as document volume, completion time, and the number of human interventions. Amazon CloudWatch dashboards can chart pipeline metrics, and Amazon QuickSight can visualize processing records exported or queried from DynamoDB. This visibility lets business owners see how well the automation is performing, and it supplies evidence for ROI calculations, such as the labor hours saved and the number of documents processed automatically.

Monitoring and optimization keep your IDP solution reliable, efficient, and cost-effective over the long term. AWS tools such as CloudWatch and X-Ray give you a solid foundation for observing the system in operation. By combining proactive tuning with close attention to metrics, you can improve performance continuously: higher uptime, faster processing, lower costs, and problems caught before they affect end users. Once in production, the IDP solution is a living system that evolves with use, like any other essential software in your organization.

Unlocking Efficiency: The Future of Document Processing with IDP